Publications

- Selected Papers only

- All Papers (by year)

- AI (Artificial Intelligence)

- Robotics

- Vision

-

Wikipedia for Robots. A View from Ashutosh Saxena. In MIT Technology Review about one of ten innovative technologies in 2016. [article]

@inproceedings{wikipediaforrobots-saxena-2016,

title={Wikipedia for Robots},

author={Ashutosh Saxena},

year={2016},

booktitle={MIT Technoogy Review}

}Humans have gained a lot of value by organizing all their knowledge and making it widely accessible—in textbooks, libraries, Wikipedia, and YouTube, to name a few examples.

However, the organized collections of knowledge that work for humans aren’t so great for robots. A robot wouldn’t get much useful information if it queried a search engine for how to “bring sweet tea from the kitchen.” Robots require something different—access to finer details for planning, control, and natural language understanding. -

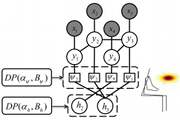

Recurrent Neural Networks for Driver Activity Anticipation via Sensory-Fusion Architecture. Ashesh Jain, Avi Singh, Hema S Koppula, Shane Soh, and Ashutosh Saxena. In International Conference on Robotics and Automation (ICRA), 2016. [project page, arxiv]

@inproceedings{rnn-brain4cars-saxena-2016,

title={Recurrent Neural Networks for Driver Activity Anticipation via Sensory-Fusion Architecture},

author={Ashesh Jain and Avi Singh and Hema S Koppula and Shane Soh and Ashutosh Saxena},

year={2016},

booktitle={International Conference on Robotics and Automation (ICRA)}

}Abstract: Anticipating the future actions of a human is a widely studied problem in robotics that requires spatio-temporal reasoning. In this work we propose a deep learning approach for anticipation in sensory-rich robotics applications. We introduce a sensory-fusion architecture which jointly learns to anticipate and fuse information from multiple sensory streams. Our architecture consists of Recurrent Neural Networks (RNNs) that use Long Short-Term Memory (LSTM) units to capture long temporal dependencies. We train our architecture in a sequence-to-sequence prediction manner, and it explicitly learns to predict the future given only a partial temporal context. We further introduce a novel loss layer for anticipation which prevents over-fitting and encourages early anticipation. We use our architecture to anticipate driving maneuvers several seconds before they happen on a natural driving data set of 1180 miles. The context for maneuver anticipation comes from multiple sensors installed on the vehicle. Our approach shows significant improvement over the state-of-the-art in maneuver anticipation by increasing the precision from 77.4% to 90.5% and recall from 71.2% to 87.4%.

-

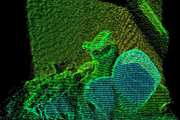

Deep Multimodal Embedding: Manipulating Novel Objects with Point-clouds, Language and Trajectories, Jaeyong Sung, Ian Lenz, and Ashutosh Saxena. International Conference on Robotics and Automation (ICRA), 2017. (finalist for ICRA Best cognitive robotics paper award) [PDF, arxiv, project page]

@inproceedings{robobarista_deepmultimodalembedding_2015,

title={Deep Multimodal Embedding: Manipulating Novel Objects with Point-clouds, Language and Trajectories},

author={Jaeyong Sung and Ian Lenz and Ashutosh Saxena},

year={2017},

booktitle={International Conference on Robotics and Automation (ICRA)}

}Abstract: A robot operating in a real-world environment needs to perform reasoning with a variety of sensing modalities. However, manually designing features that allow a learning algorithm to relate these different modalities can be extremely challenging. In this work, we consider the task of manipulating novel objects and appliances. To this end, we learn to embed point-cloud, natural language, and manipulation trajectory data into a shared embedding space using a deep neural network. In order to learn semantically meaningful spaces throughout our network, we introduce a method for pre-training its lower layers for multimodal feature embedding and a method for fine- tuning this embedding space using a loss-based margin. We test our model on the Robobarista dataset, where we achieve significant improvements in both accuracy and inference time over the previous state of the art.

-

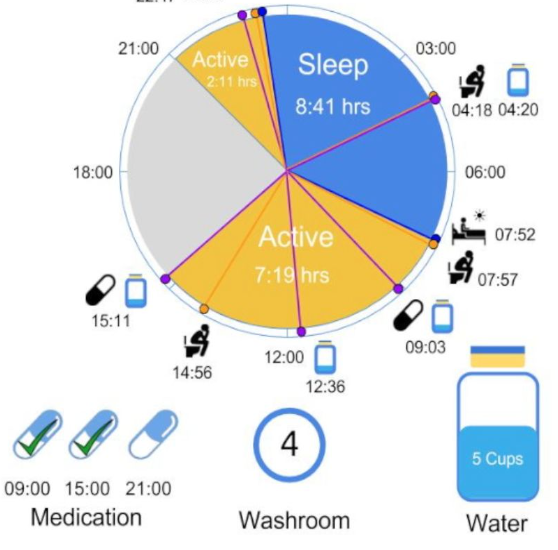

Home as a Caregiver: How AI-Enabled Apartments and Homes Can Change Senior Living. Caspar.AI. Senior Housing News, Jan 2021. [article]

@inproceedings{home-as-a-caregiver-2020,

title={Home as a Caregiver: How AI-Enabled Apartments and Homes Can Change Senior Living},

author={Caspar.AI},

year={2021},

booktitle={Senior Housing News}

}For senior housing, 2020 has been a year of challenges. Some were known entering the year, namely the staffing shortage. The one that has defined the year — the COVID-19 pandemic — was a surprise.

This leaves vulnerable seniors without the support they need, despite senior housing staff’s best efforts. To solve this, one technology innovator is taking the next step in senior housing of delivering artificial intelligence to homes: AI homes. These easy-to-install AI-enabled spaces address several of today’s senior living problems, creating a new living experience suited for 2021, with a return on investment crucial for bottom line health.

This white paper shows how Caspar.AI is delivering safety, wellness and increased work efficiency, while evolving community living from high-touch to touch-free — all in a package overwhelmingly popular with residents. In short, helping turn the home into another caregiver. -

Senior Living Communities: Made Safer by AI. Ashutosh Saxena, David Cheriton. Tech report, July 2020. (Top 100 AI by CB Insights) [article]

@inproceedings{safe-senior-living-2020,

title={Senior Living Communities: Made Safer by AI},

author={Ashutosh Saxena and David Cheriton},

year={2020},

booktitle={Tech report}

}There is a historically unprecedented shift in demographics towards seniors, which will result in significant housing development over the coming decade. This is an enormous opportunity for real-estate operators to innovate and address the demand in this growing market.

However investments in this area are fraught with risk. Seniors often have more health issues, and Covid-19 has exposed just how vulnerable they are – especially those living in close proximity. Conventionally, most services for seniors are “high-touch”, requiring close physical contact with trained caregivers. Not only are trained caregivers short in supply, but the pandemic has made it evident that conventional high-touch approaches to senior care are high-cost and greater risk. There are not enough caregivers to meet the needs of this emerging demographic, and even fewer who want to undertake the additional training and risk of working in a senior facility, especially given the current pandemic.

In this article, we rethink the design of senior living facilities to mitigate the risks and costs using automation. With AI-enabled pervasive automation, we claim there is an opportunity, if not an urgency, to go from high-touch to almost "no touch" while dramatically reducing risk and cost. Although our vision goes beyond the current reality, we cite measurements from Caspar-enabled senior properties that show the potential benefit of this approach. -

Privacy-Preserving Distributed AI for Smart Homes. Caspar AI with David Cheriton and Ken Birman. Tech report, 2020. [article]

@inproceedings{privacy-preserving-distributed-ai-2020,

title={Privacy-Preserving Distributed AI for Smart Homes},

author={Caspar AI and David Cheriton and Ken Birman},

year={2020},

booktitle={Tech report}

}Distributed AI (D-AI) is enabling rapid progress on smart IoT systems, homes, and cities. D-AI refers to any AI system with discrete AI subsystems that can be combined to create ensemble-intelligences. Here, we take the next step and introduce the concept of a Privacy-Preserving IoT Cloud (PPIC) optimized to host D-AI applications close to the devices. Caspar.ai, which adopts this approach, is currently providing cost-efficient smart- home solutions for multi-family residential communities.

-

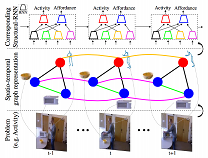

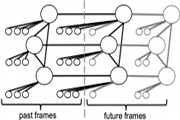

Structural-RNN: Deep Learning on Spatio-Temporal Graphs. Ashesh Jain, Amir R. Zamir, Silvio Savarese, and Ashutosh Saxena. In Computer Vision and Pattern Recognition (CVPR) oral, 2016. (best student paper) [PDF, project page, arxiv]

@inproceedings{rnn-brain4cars-saxena-2016,

title={Structural-RNN: Deep Learning on Spatio-Temporal Graphs},

author={Ashesh Jain and Amir R. Zamir and Silvio Savarese and Ashutosh Saxena},

year={2016},

booktitle={Computer Vision and Pattern Recognition (CVPR)}

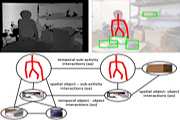

}Abstract: Deep Recurrent Neural Network architectures, though remarkably capable at modeling sequences, lack an intuitive high-level spatio-temporal structure. That is while many problems in computer vision inherently have an underlying high-level structure and can benefit from it. Spatio-temporal graphs are a popular flexible tool for imposing such high-level intuitions in the formulation of real world problems. In this paper, we propose an approach for combining the power of high-level spatio-temporal graphs and sequence learning success of Recurrent Neural Networks (RNNs). We develop a scalable method for casting an arbitrary spatio-temporal graph as a rich RNN mixture that is feedforward, fully differentiable, and jointly trainable. The proposed method is generic and principled as it can be used for transforming any spatio-temporal graph through employing a certain set of well defined steps. The evaluations of the proposed approach on a diverse set of problems, ranging from modeling human motion to object interactions, shows improvement over the state-of-the-art with a large margin. We expect this method to empower a new convenient approach to problem formulation through high-level spatio-temporal graphs and Recurrent Neural Networks, and be of broad interest to the community.

-

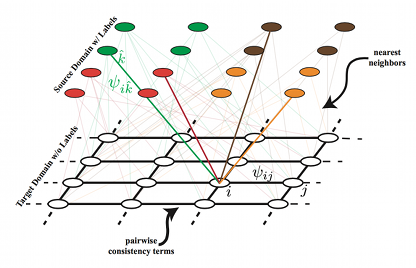

Learning Transferrable Representations for Unsupervised Domain Adaptation. Ozan Sener, Hyun Oh Song, Ashutosh Saxena, and Silvio Savarese. In Neural Information Processing Systems (NIPS) , 2016. [PDF]

@inproceedings{sener-saxena-transfer-nips-2016,

title={Learning Transferrable Representations for Unsupervised Domain Adaptation},

author={Ozan Sener and Hyun Oh Song and Ashutosh Saxena and Silvio Savarese},

year={2016},

booktitle={Neural Information Processing Systems (NIPS)}

}Abstract: Supervised learning with large scale labeled datasets and deep layered models has made a paradigm shift in diverse areas in learning and recognition. However, this approach still suffers generalization issues under the presence of a domain shift between the training and the test data distribution. In this regard, unsupervised domain adaptation algorithms have been proposed to directly address the domain shift problem. In this paper, we approach the problem from a transductive perspective. We incorporate the domain shift and the transductive target inference into our framework by jointly solving for an asymmetric similarity metric and the optimal transductive target label assignment. We also show that our model can easily be extended for deep feature learning in order to learn features which are discriminative in the target domain. Our experiments show that the proposed method significantly outperforms state-of-the-art algorithms in both object recognition and digit classification experiments by a large margin.

-

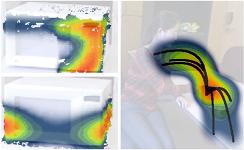

Watch-Bot: Unsupervised Learning for Reminding Humans of Forgotten Actions, Chenxia Wu, Jiemi Zhang, Bart Selman, Silvio Savarese, Ashutosh Saxena. In International Conference on Robotics and Automation (ICRA), 2016. [ArXiv PDF, PDF, journal version, project page]

@article{chenxiawu_watchnbots_icra2016,

title={Watch-Bot: Unsupervised Learning for Reminding Humans of Forgotten Actions},

author={Chenxia Wu and Jiemi Zhang and Bart Selman and Silvio Savarese and Ashutosh Saxena},

year={2016},

booktitle={International Conference on Robotics and Automation (ICRA)}

}We present a robotic system that watches a human using a Kinect v2 RGB-D sensor, detects what he forgot to do while performing an activity, and if necessary reminds the person using a laser pointer to point out the related object. Our simple setup can be easily deployed on any assistive robot.

Our approach is based on a learning algorithm trained in a purely unsupervised setting, which does not require any human annotations. This makes our approach scalable and applicable to variant scenarios. Our model learns the action/object co-occurrence and action temporal relations in the activity, and uses the learned rich relationships to infer the forgotten action and the related object. We show that our approach not only improves the unsupervised action segmentation and action cluster assignment performance, but also effectively detects the forgotten actions on a challenging human activity RGB-D video dataset. In robotic experiments, we show that our robot is able to remind people of forgotten actions successfully.

-

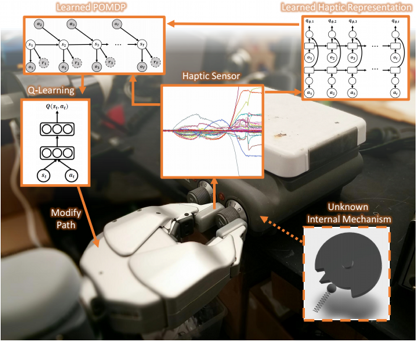

Learning to Represent Haptic Feedback for Partially-Observable Tasks, Jaeyong Sung, J. Kenneth Salisbury, Ashutosh Saxena. International Conference on Robotics and Automation (ICRA), 2017. (finalist for ICRA Best cognitive robotics paper award) [PDF]

@inproceedings{robobarista_haptic_2017,

title={Learning to Represent Haptic Feedback for Partially-Observable Tasks},

author={Jaeyong Sung and J. Kenneth Salisbury and Ashutosh Saxena},

year={2017},

booktitle={International Conference on Robotics and Automation (ICRA)}

}Abstract: coming soon

-

Robobarista: Learning to Manipulate Novel Objects via Deep Multimodal Embedding, Jaeyong Sung, Seok H Jin, Ian Lenz and Ashutosh Saxena. Cornell Tech Report, 2016. [ArXiv, project page]

@inproceedings{sung2016_robobarista_deepembedding,

title={Robobarista: Learning to Manipulate Novel Objects via Deep Multimodal Embedding},

author={Jaeyong Sung and Seok H Jin and Ian Lenz and Ashutosh Saxena},

year={2016},

booktitle={Cornell Tech Report}

}Abstract: There is a large variety of objects and appliances in human environments, such as stoves, coffee dispensers, juice extractors, and so on. It is challenging for a roboticist to program a robot for each of these object types and for each of their instantiations. In this work, we present a novel approach to manipulation planning based on the idea that many household objects share similarly-operated object parts. We formulate the manipulation planning as a structured prediction problem and learn to transfer manipulation strategy across different objects by embedding point-cloud, natural language, and manipulation trajectory data into a shared embedding space using a deep neural network. In order to learn semantically meaningful spaces throughout our network, we introduce a method for pre-training its lower layers for multimodal feature embedding and a method for fine-tuning this embedding space using a loss-based margin. In order to collect a large number of manipulation demonstrations for different objects, we develop a new crowd-sourcing platform called Robobarista. We test our model on our dataset consisting of 116 objects and appliances with 249 parts along with 250 language instructions, for which there are 1225 crowd-sourced manipulation demonstrations. We further show that our robot with our model can even prepare a cup of a latte with appliances it has never seen before.

-

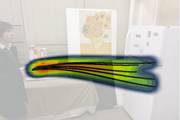

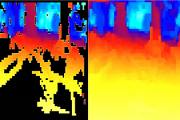

Brain4Cars: Car That Knows Before You Do via Sensory-Fusion Deep Learning Architecture, Ashesh Jain, Hema S Koppula, Shane Soh, Bharad Raghavan, Avi Singh, Ashutosh Saxena. Cornell Tech Report (journal version), Jan 2016. (Earlier presented at ICCV'15.) [Arxiv PDF, project+video]

@inproceedings{misra_sung_lee_saxena_ijrr2015_groundingnlp,

title="Brain4Cars: Car That Knows Before You Do via Sensory-Fusion Deep Learning Architecture",

author="Ashesh Jain and Hema S Koppula and Shane Soh and Bharad Raghavan and Avi Singh and Ashutosh Saxena",

year="2016",

booktitle="Cornell Tech Report",

}Abstract: Advanced Driver Assistance Systems (ADAS) have made driving safer over the last decade. They prepare vehicles for unsafe road conditions and alert drivers if they perform a dangerous maneuver. However, many accidents are unavoidable because by the time drivers are alerted, it is already too late. Anticipating maneuvers beforehand can alert drivers before they perform the maneuver and also give ADAS more time to avoid or prepare for the danger.

In this work we propose a vehicular sensor-rich platform and learning algorithms for maneuver anticipation. For this purpose we equip a car with cameras, Global Positioning System (GPS), and a computing device to capture the driving context from both inside and outside of the car. In order to anticipate maneuvers, we propose a sensory-fusion deep learning architecture which jointly learns to anticipate and fuse multiple sensory streams. Our architecture consists of Recurrent Neural Networks (RNNs) that use Long Short-Term Memory (LSTM) units to capture long temporal dependencies. We propose a novel training procedure which allows the network to predict the future given only a partial temporal context. We introduce a diverse data set with 1180 miles of natural freeway and city driving, and show that we can anticipate maneuvers 3.5 seconds before they occur in real-time with a precision and recall of 90.5% and 87.4% respectively. -

Tell Me Dave: Context-Sensitive Grounding of Natural Language to Manipulation Instructions, Dipendra K Misra, Jaeyong Sung, Kevin Lee, Ashutosh Saxena. International Journal of Robotics Research (IJRR), Jan 2016. (Earlier presented at RSS'14.) [PDF, project+video]

@inproceedings{misra_sung_lee_saxena_ijrr2015_groundingnlp,

title="Tell Me Dave: Context-Sensitive Grounding of Natural Language to Manipulation Instructions",

author="Dipendra K Misra and Jaeyong Sung and Kevin Lee and Ashutosh Saxena",

year="2016",

booktitle="International Journal of Robotics Research (IJRR)",

}Abstract: It is important for a robot to be able to interpret natural language commands given by a human. In this paper, we consider performing a sequence of mobile manipulation tasks with instructions described in natural language (NL). Given a new environment, even a simple task such as of boiling water would be performed quite differently depending on the presence, location and state of the objects. We start by collecting a dataset of task descriptions in free-form natural language and the corresponding grounded task-logs of the tasks performed in an online robot simulator. We then build a library of verb-environment-instructions that represents the possible instructions for each verb in that environment - these may or may not be valid for a different environment and task context.

We present a model that takes into account the variations in natural language, and ambiguities in grounding them to robotic instructions with appropriate environment context and task constraints. Our model also handles incomplete or noisy NL instructions. Our model is based on an energy function that encodes such properties in a form isomorphic to a conditional random field. We evaluate our model on tasks given in a robotic simulator and show that it successfully outperforms the state-of-the-art with 61.8% accuracy. We also demonstrate several of grounded robotic instruction sequences on a PR2 robot through Learning from Demonstration approach. -

Learning from Large Scale Visual Data for Robots, Ozan Sener. PhD Thesis, Cornell University, 2016. [PDF, webpage]

@phdthesis{ozansener_phdthesis,

title={Learning from Large Scale Visual Data for Robots},

author={Ozan Sener},

year={2016},

school={Cornell University}

}Abstract: Humans created a tremendous value by collecting and organizing all their knowl- edge in publicly accessible forms, as in Wikipedia and YouTube. The availability of such large knowledge-bases not only changed the way we learn, it also changed how we design artificial intelligence algorithms. Recently, propelled by the avail- able data and expressive models, many successful computer vision and natural language processing algorithms have emerged. However, we did not see a simi- lar shift in robotics. Our robots are still having trouble recognizing basic objects, detecting humans, and even performing simple tasks like how to make an omelette.

In this thesis, we study the type of knowledge robots need. Our initial anal- ysis suggests that robots need a very unique type of knowledge base with many requirements like multi-modal data and physical grounding of concepts. We fur- ther design such a large-scale knowledge base and show how can it be used in many robotics tasks. Given this knowledge base, robots need to handle many challenges like scarcity of the supervision and the shift between different modali- ties and domains. We propose machine learning algorithms which can handle lack of supervision and domain shift relying only on the latent structure of the available knowledge. Although we have large-scale knowledge, it is still missing some of the cases robots can encounter. Hence, we also develop algorithms which can explic- itly model, learn and estimate the uncertainty in the robot perception again using underlying latent structure. Our algorithms show state-of-the-art performance in many robotics and computer vision benchmarks. -

Learning from Natural Human Interactions for Assistive Robots, Ashesh Jain. PhD Thesis, Cornell University, 2016. [PDF, webpage]

@phdthesis{ashesh_phdthesis,

title={Learning from Natural Human Interactions for Assistive Robots},

author={Ashesh Jain},

year={2016},

school={Cornell University}

}Abstract: Leveraging human knowledge to train robots is a core problem in robotics. In the near future we will see humans interacting with agents such as, assistive robots, cars, smart houses, etc. Agents that can elicit and learn from such interactions will find use in many applications. Previous works have proposed methods for learning low-level robotic controls or motion primitives from (near) optimal human signals. In many applications such signals are not naturally available. Furthermore, optimal human signals are also difficult to elicit from non-expert users at a large scale.

Understanding and learning user preferences from weak signals is therefore of great emphasis. To this end, in this dissertation we propose interactive learning systems which allow robots to learn by interacting with humans. We develop interaction methods that are natural to the end-user, and algorithms to learn from sub-optimal interactions. Furthermore, the interactions between humans and robots have complex spatio-temporal structure. Inspired by the recent success of powerful function approximators based on deep neural networks, we propose a generic framework for modeling interactions with structure of Recurrent Neural Networks. We demonstrate applications of our work on real-world scenarios on assistive robots and cars. This work also established state-of-the-art on several existing benchmarks. -

Learning from Natural Human Interactions for Assistive Robots, Jaeyong Sung. PhD Thesis, Cornell University, 2017. [PDF, webpage]

@phdthesis{jaeyongsung_phdthesis,

title={Learning to Manipulate Novel Objects for Assistive Robots},

author={Jaeyong Sung},

year={2017},

school={Cornell University}

}Abstract: coming soon

-

Unsupervised Structured Learning of Human Activities for Robot Perception, Chenxia Wu. PhD Thesis, Cornell University, 2016. [PDF, webpage]

@phdthesis{ozansener_phdthesis,

title={Unsupervised Structured Learning of Human Activities for Robot Perception},

author={Chenxia Wu},

year={2016},

school={Cornell University}

}Abstract: Despite structure models in the supervised settings have been well studied and widely used in different domains, discovering latent structures is still a challenging problem in the unsupervised learning. The existing works usually require more independence assumptions. In this work, we propose unsuper- vised structured learning models including causal topic model, fully connected CRF autoencoder, which have the ability of modeling more complex relations with less independence. We also design efficient learning and inference opti- mizations that makes the computations still tractable. As a result, we make more flexible and accurate robot perceptions in more interesting applications.

We first note that modeling the hierarchical semantic relations of objects as well as how objects are interacting with humans is very important to making flexible and reliable robotic perception. So we propose a hierarchical semantic labeling algorithm to producing scene labels in different levels of abstraction for specific robot tasks. We also propose an unsupervised learning algorithms to leverage the interactions between humans and objects, so that it automatically discovers the useful common object regions from a set of images.

Second, we note that it is important for a robot to be able to detect not only what a human is currently doing but also more complex relations such as action temporal relations, human-object relations. Then the robot is able to achieve better perception performance and more flexible tasks. So we propose a causal topic model to incorporating both short-term and long-term temporal relations between human actions as well as human-object relations, and we develop a new robotic system thats watches not only what a human is currently doing but also what he forgot to do, and if necessary reminds the person.

In the domain of human activities and environments, we show how to build models that can learn the semantic, spatial and temporal structures in the unsu-pervised setting. We show that these approaches are useful in multiple domains including robotics, object recognition, human activity modeling, image/video data mining, visual summarization. Since our techniques are unsupervised and structured modeled, they are easily extended and scaled to other areas, such as natural language processing, robotic planning/manipulation, or multimedia analysis, etc. -

MDPs with Unawareness in Robotics. Nan Rong, Joseph Halpern, and Ashutosh Saxena. In Uncertainty in Artificial Intelligence (UAI), 2016. [pdf]

@inproceedings{mdpu-robotics-2016,

title={MDPs with Unawareness in Robotics},

author={Nan Rong and Joseph Halpern and Ashutosh Saxena},

year={2016},

booktitle={Uncertainty in Artificial Intelligence (UAI)}

}We formalize decision-making problems in robotics and automated control using continuous MDPs and actions that take place over continuous time intervals. We then approximate the continuous MDP using finer and finer discretizations. Doing this results in a family of systems, each of which has an extremely large action space, although only a few actions are "interesting". In this sense, we say the decision maker is unaware of which actions are “interesting”. This can be modeled using MDPUs - MDPs with unawareness - where the action space is much smaller. As we show, MDPUs can be used as a general framework for learning tasks in robotic problems. We prove results on the difficulty of learning a near-optimal policy in an an MDPU for a continuous task. We apply these ideas to the problem of having a humanoid robot learn on its own how to walk.

-

Human Centred Object Co-Segmentation. Chenxia Wu, Jiemi Zhang, Ashutosh Saxena, Silvio Savarese. Cornell Tech Report, 2016. [pdf coming soon, Arxiv]

@inproceedings{mdpu-robotics-2016,

title={Human Centred Object Co-Segmentation},

author={Chenxia Wu and Jiemi Zhang and Ashutosh Saxena and Silvio Savarese},

year={2016},

booktitle={Cornell Tech Report}

}Co-segmentation is the automatic extraction of the common semantic regions given a set of images. Different from previous approaches mainly based on object visuals, in this paper, we propose a human centred object co-segmentation approach, which uses the human as another strong evidence. In order to discover the rich internal structure of the objects reflecting their human-object interactions and visual similarities, we propose an unsupervised fully connected CRF auto-encoder incorporating the rich object features and a novel human-object interaction representation. We propose an efficient learning and inference algorithm to allow the full connectivity of the CRF with the auto-encoder, that establishes pairwise relations on all pairs of the object proposals in the dataset. Moreover, the auto-encoder learns the parameters from the data itself rather than supervised learning or manually assigned parameters in the conventional CRF. In the extensive experiments on four datasets, we show that our approach is able to extract the common objects more accurately than the state-of-the-art co-segmentation algorithms.

-

Understanding People from Visual Data for Assistive Robots, Hema S Koppula. PhD Thesis, Cornell University, 2015. [PDF, webpage]

@phdthesis{hema_phdthesis,

title={Understanding People from Visual Data for Assistive Robots},

author={Hema S Koppula},

year={2015},

school={Cornell University}

}Abstract: Understanding people in complex dynamic environments is important for many applications such as robotic assistants, health-care monitoring systems, self driving cars, etc. This is a challenging problem as human actions and in- tents are not always observable and often contain large amounts of ambiguity. Moreover, human environments are complex with lots of objects and many pos- sible ways of interacting with them. This leads to a huge variation in the way people perform various tasks.

The focus of this dissertation is to develop learning algorithms for under- standing people and their environments from RGB-D data. We address the problems of labeling environments, detecting past activities and anticipating what will happen in the future. In order to enable agents operating in human environments to perform holistic reasoning, we need to jointly model the hu- mans, objects and environments and capture the rich context between them.

We propose graphical models that naturally capture the rich spatio-temporal relations between human poses and objects in a 3D scene. We propose an effi- cient method to sample multiple possible graph structures and reason about the many alternate future possibilities. Our models also provide a functional rep- resentation of the environments, allowing agents to reactively plan their own actions to assist in the activities. We applied these algorithms successfully on our robot for performing various assistive tasks ranging from finding objects in large cluttered rooms to working alongside humans in collaborative tasks. -

Deep Learning for Robotics, Ian Lenz. PhD Thesis, Cornell University, 2015. [PDF, webpage]

@phdthesis{ianlenz_phdthesis,

title={Deep Learning for Robotics},

author={Ian Lenz},

year={Dec 2015},

school={Cornell University}

}Abstract: Robotics faces many unique challenges as robotic platforms move out of the lab and into the real world. In particular, the huge amount of variety encountered in real-world environments is extremely challenging for existing robotic control algorithms to handle. This necessistates the use of machine learning algorithms, which are able to learn controls given data. However, most conventional learning algorithms require hand-designed parameterized models and features, which are infeasible to design for many robotic tasks. Deep learning algorithms are general non-linear models which are able to learn features directly from data, making them an excellent choice for such robotics applications. However, care must be taken to design deep learning algorithms and supporting systems appropriate for the task at hand. In this work, I describe two applications of deep learning algorithms and one application of hardware neural networks to difficult robotics problems. The problems addressed are robotic grasping, food cutting, and aerial robot obstacle avoidance, but the algorithms presented are designed to be generalizable to related tasks.

-

Hallucinated Humans: Learning Latent Factors to Model 3D Environments, Yun Jiang. PhD Thesis, Cornell University, 2015. [PDF, webpage]

@phdthesis{yunjiang_phdthesis,

title={Hallucinated Humans: Learning Latent Factors to Model 3D Environments},

author={Yun Jiang},

year={2015},

school={Cornell University}

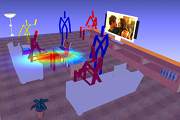

}Abstract: The ability to correctly reason human environment is critical for personal robots. For example, if a robot is asked to tidy a room, it needs to detect object types, such as shoes and books, and then decides where to place them properly. Some- times being able to anticipate human-environment interactions is also desirable. For example, the robot would not put any object on the chair if it understands that humans would sit on it.

The idea of modeling object-object relations has been widely leveraged in many scene understanding applications. For instance, the object found in front of a monitor is more likely to be a keyboard because of the high correlation of the two objects. However, as the objects are designed by humans and for human usage, when we reason about a human environment, we reason about it through an interplay between the environment, objects and humans. For example, the objects, monitor and keyboard, are strongly spatially correlated only because a human types on the keyboard while watching the monitor. The key idea of this thesis is to model environments not only through objects, but also through latent human poses and human-object interactions.

We start by designing a generic form of human-object interaction, also re- ferred as ‘object affordance’. Human-object relations can thus be quantified through a function of object affordance, human configuration and object con-figuration. Given human poses and object affordances, we can capture the rela- tions among humans, objects and the scene through Conditional Random Fields (CRFs). For scenarios where no humans present, our idea is to still leverage the human-object relations by hallucinating potential human poses.

In order to handle the large number of latent human poses and a large va- riety of their interactions with objects, we present Infinite Latent Conditional Random Field (ILCRF) that models a scene as a mixture of CRFs generated from Dirichlet processes. In each CRF, we model objects and object-object relations as existing nodes and edges, and hidden human poses and human-object rela- tions as latent nodes and edges. ILCRF generatively models the distribution of different CRF structures over these latent nodes and edges.

We apply the model to the challenging applications of 3D scene labeling and robotic scene arrangement. In extensive experiments, we show that our model significantly outperforms the state-of-the-art results in both applications. We test our algorithm on a robot for arranging objects in a new scene using the two applications aforementioned. We further extend the idea of hallucinating static human poses to anticipating human activities. We also present learning-based grasping and placing approaches for low-level manipulation tasks in compli- mentary to the high-level scene understanding tasks. -

Unsupervised Semantic Parsing of Video Collections, Ozan Sener, Amir Zamir, Silvio Savarese, and Ashutosh Saxena. In International Conference on Computer Vision (ICCV), 2015. [project page, arxiv, PDF]

@inproceedings{sener2015_unsupervisedvideo,

title={Unsupervised Semantic Parsing of Video Collections},

author={Ozan Sener and Amir Zamir and Silvio Savarese and Ashutosh Saxena},

year={2015},

booktitle={International Conference on Computer Vision (ICCV)}

}Abstract: Human communication typically has an underlying structure. This is reflected in the fact that in many user generated videos, a starting point, ending, and certain objective steps between these two can be identified. In this paper, we propose a method for parsing a video into such semantic steps in an unsupervised way. The proposed method is capable of providing a semantic “storyline” of the video composed of its objective steps. We accomplish this using both visual and language cues in a joint generative model. The proposed method can also provide a textual description for each of the identified semantic steps and video segments. We evaluate this method on a large number of complex YouTube videos and show results of unprecedented quality for this intricate and impactful problem.

-

Robobarista: Object Part based Transfer of Manipulation Trajectories from Crowd-sourcing in 3D Pointclouds, Jaeyong Sung, Seok H Jin, and Ashutosh Saxena. International Symposium on Robotics Research (ISRR), 2015. [PDF, arxiv, project page]

@inproceedings{sung2015_robobarista,

title={Robobarista: Object Part based Transfer of Manipulation Trajectories from Crowd-sourcing in 3D Pointclouds},

author={Jaeyong Sung and Seok H Jin and Ashutosh Saxena},

year={2015},

booktitle={International Symposium on Robotics Research (ISRR)}

}Abstract: There is a large variety of objects and appliances in human environments, such as stoves, coffee dispensers, juice extractors, and so on. It is challenging for a roboticist to program a robot for each of these object types and for each of their instantiations. In this work, we present a novel approach to manipulation planning based on the idea that many household objects share similarly-operated object parts. We formulate the manipulation planning as a structured prediction problem and design a deep learning model that can handle large noise in the manipulation demonstrations and learns features from three different modalities: point-clouds, language and trajectory. In order to collect a large number of manipulation demonstrations for different objects, we developed a new crowd-sourcing platform called Robobarista. We test our model on our dataset consisting of 116 objects with 249 parts along with 250 language instructions, for which there are 1225 crowd-sourced manipulation demonstrations. We further show that our robot can even manipulate objects it has never seen before.

-

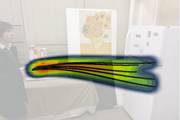

Car that Knows Before You Do: Anticipating Maneuvers via Learning Temporal Driving Models. Ashesh Jain, Hema S Koppula, Bharad Raghavan, and Ashutosh Saxena. In International Conference on Computer Vision (ICCV), 2015. [PDF, Arxiv, project page]

@inproceedings{jain2015_brain4cars,

title={Know Before You Do: Anticipating Maneuvers via Learning Temporal Driving Models},

author={Ashesh Jain and Hema S Koppula and Bharad Raghavan and Ashutosh Saxena},

year={2015},

booktitle={Cornell Tech Report}

}Abstract: Advanced Driver Assistance Systems (ADAS) have made driving safer over the last decade. They prepare vehicles for unsafe road conditions and alert drivers if they perform a dangerous maneuver. However, many accidents are unavoidable because by the time drivers are alerted, it is already too late. Anticipating maneuvers a few seconds beforehand can alert drivers before they perform the maneuver and also give ADAS more time to avoid or prepare for the danger. Anticipation requires modeling the driver’s action space, events inside the vehicle such as their head movements, and also the outside environment. Performing this joint modeling makes anticipation a challenging problem.

In this work we anticipate driving maneuvers a few seconds before they occur. For this purpose we equip a car with cameras and a computing device to capture the context from both inside and outside of the car. We represent the context with expressive features and propose an Autoregressive Input-Output HMM to model the contextual information. We evaluate our approach on a diverse data set with 1180 miles of natural freeway and city driving and show that we can anticipate maneuvers 3.5 seconds before they occur with over 80% F1-score. Our computation time during inference is under 3.6 milliseconds.

-

DeepMPC: Learning Deep Latent Features for Model Predictive Control. Ian Lenz, Ross Knepper, and Ashutosh Saxena. In Robotics Science and Systems (RSS), 2015. (full oral) [PDF, extended version PDF, project page]

@inproceedings{deepmpc-lenz-knepper-saxena-rss2015,

title={DeepMPC: Learning Deep Latent Features for Model Predictive Control},

author={Ian Lenz and Ross Knepper and Ashutosh Saxena},

year={2015},

booktitle={Robotics Science and Systems (RSS)}

}Abstract: Designing controllers for tasks with complex non-linear dynamics is extremely challenging, time-consuming, and in many cases, infeasible. This difficulty is exacerbated in tasks such as robotic food-cutting, in which dynamics might vary both with environmental properties, such as material and tool class, and with time while acting. In this work, we present DeepMPC, an online real-time model-predictive control approach designed to handle such difficult tasks. Rather than hand-design a dynamics model for the task, our approach uses a novel deep architecture and learning algorithm, learning controllers for complex tasks directly from data. We validate our method in experiments on a large-scale dataset of 1488 material cuts for 20 diverse classes, and in 450 real-world robotic experiments, demonstrating significant improvement over several other approaches.

-

rCRF: Recursive Belief Estimation over CRFs in RGB-D Activity Videos. Ozan Sener, Ashutosh Saxena. In Robotics Science and Systems (RSS), 2015. [PDF]

@inproceedings{rcrf-sener-saxena-rss2015,

title={rCRF: Recursive Belief Estimation over CRFs in RGB-D Activity Videos},

author={Ozan Sener and Ashutosh Saxena},

year={2015},

booktitle={Robotics Science and Systems (RSS)}

}Abstract: For assistive robots, anticipating the future actions of humans is an essential task. This requires modelling both the evolution of the activities over time and the rich relationships between humans and the objects. Since the future activities of humans are quite ambiguous, robots need to assess all the future possibilities in order to choose an appropriate action. Therefore, a successful anticipation algorithm needs to compute all plausible future activities and their corresponding probabilities.

In this paper, we address the problem of efficiently computing beliefs over future human activities from RGB-D videos. We present a new recursive algorithm that we call Recursive Conditional Random Field (rCRF) which can compute an accurate belief over a temporal CRF model. We use the rich modelling power of CRFs and describe a computationally tractable inference algorithm based on Bayesian filtering and structured diversity. In our experiments, we show that incorporating belief, computed via our approach, significantly outperforms the state-of-the-art methods, in terms of accuracy and computation time.

-

Environment-Driven Lexicon Induction for High-Level Instructions. Dipendra K Misra, Kejia Tao, Percy Liang, and Ashutosh Saxena. Association for Computational Linguistics (ACL), 2015. [PDF, supplementary material, project page]

@inproceedings{misra_acl2015_environmentdrivenlexicon,

title={Environment-Driven Lexicon Induction for High-Level Instructions},

author={Dipendra K Misra and Kejia Tao and Percy Liang and Ashutosh Saxena},

year={2015},

booktitle={Association for Computational Linguistics (ACL)}

}Abstract: We focus on the task of interpreting complex natural language instructions to a robot, in which we must ground high-level commands such as microwave the cup to low-level actions such as grasping. Previous approaches that learn a lexicon during training have inadequate coverage at test time, and pure search strategies cannot handle the exponential search space. We propose a new hybrid approach that leverages the environment to induce new lexical entries at test time, even for new verbs. Our semantic parsing model jointly reasons about the text, logical forms, and environment over multi-stage instruction sequences. We introduce a new dataset and show that our approach is able to successfully ground new verbs such as distribute, mix, arrange to complex logical forms, each containing up to four predicates.

-

Anticipating Human Activities using Object Affordances for Reactive Robotic Response, Hema S Koppula, Ashutosh Saxena. IEEE Transactions in Pattern Analysis and Machine Intelligence (PAMI), 2015. (Earlier best student paper award in RSS'13) [PDF, project page]

@inproceedings{koppula2015_anticipatingactivities,

title={Anticipating Human Activities using Object Affordances for Reactive Robotic Response},

author={Hema Koppula and Ashutosh Saxena},

year={2015},

booktitle={IEEE Trans PAMI}

}Abstract: An important aspect of human perception is anticipation, which we use extensively in our day-to-day activities when interacting with other humans as well as with our surroundings. Anticipating which activities will a human do next (and how) can enable an assistive robot to plan ahead for reactive responses. Furthermore, anticipation can even improve the detection accuracy of past activities. The challenge, however, is two-fold: We need to capture the rich context for modeling the activities and object affordances, and we need to anticipate the distribution over a large space of future human activities. In this work, we represent each possible future using an anticipatory temporal conditional random field (ATCRF) that models the rich spatial-temporal relations through object affordances. We then consider each ATCRF as a particle and represent the distribution over the potential futures using a set of particles. In extensive evaluation on CAD-120 human activity RGB-D dataset, we first show that anticipation improves the state-of-the-art detection results. We then show that for new subjects (not seen in the training set), we obtain an activity anticipation accuracy (defined as whether one of top three predictions actually happened) of 84.1%, 74.4% and 62.2% for an anticipation time of 1, 3 and 10 seconds respectively. Finally, we also show a robot using our algorithm for performing a few reactive responses.

-

Deep Learning for Detecting Robotic Grasps, Ian Lenz, Honglak Lee, Ashutosh Saxena. International Journal on Robotics Research (IJRR), 2015. [IJRR link, PDF, more]

@article{lenz2015_deeplearning_roboticgrasp_ijrr,

title={Deep Learning for Detecting Robotic Grasps},

author={Ian Lenz and Honglak Lee and Ashutosh Saxena},

year={2015},

journal={IJRR}

}Abstract: We consider the problem of detecting robotic grasps in an RGBD view of a scene containing objects. In this work, we apply a deep learning approach to solve this problem, which avoids time-consuming hand-design of features. This presents two main challenges. First, we need to evaluate a huge number of candidate grasps. In order to make detection fast and robust, we present a two-step cascaded system with two deep networks, where the top detections from the first are re-evaluated by the second. The first network has fewer features, is faster to run, and can effectively prune out unlikely candidate grasps. The second, with more features, is slower but has to run only on the top few detections. Second, we need to handle multimodal inputs effectively, for which we present a method that applies structured regularization on the weights based on multimodal group regularization. We show that our method improves performance on an RGBD robotic grasping dataset, and can be used to successfully execute grasps on two different robotic platforms.

(An earlier version was presented in Robotics Science and Systems (RSS) 2013.)

-

Learning Preferences for Manipulation Tasks from Online Coactive Feedback, Ashesh Jain, Shikhar Sharma, Thorsten Joachims, Ashutosh Saxena. In International Journal of Robotics Research (IJRR), 2015. [PDF, project+video]

@inproceedings{jainsaxena2015_learningpreferencesmanipulation,

title="Learning Preferences for Manipulation Tasks from Online Coactive Feedback",

author="Ashesh Jain and Shikhar Sharma and Thorsten Joachims and Ashutosh Saxena",

year="2015",

booktitle="International Journal of Robotics Research (IJRR)",

}Abstract: We consider the problem of learning preferences over trajectories for mobile manipulators such as personal robots and assembly line robots. The preferences we learn are more intricate than simple geometric constraints on trajectories; they are rather governed by the surrounding context of various objects and human interactions in the environment. We propose a coactive online learning framework for teaching preferences in contextually rich environments. The key novelty of our approach lies in the type of feedback expected from the user: the human user does not need to demonstrate optimal trajectories as training data, but merely needs to iteratively provide trajectories that slightly improve over the trajectory currently proposed by the system. We argue that this coactive preference feedback can be more easily elicited than demonstrations of optimal trajectories. Nevertheless, theoretical regret bounds of our algorithm match the asymptotic rates of optimal trajectory algorithms. We implement our algorithm on two high-degree-of-freedom robots, PR2 and Baxter, and present three intuitive mechanisms for providing such incremental feedback. In our experimental evaluation we consider two context rich settings, household chores and grocery store checkout, and show that users are able to train the robot with just a few feedbacks (taking only a few minutes).

Earlier version of this work was presented at the NIPS'13 and ISRR'13.

-

Modeling 3D Environments through Hidden Human Context. Yun Jiang, Hema S Koppula, Ashutosh Saxena. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 2015. [PDF]

@article{jiang-modeling3denvironemnts-hiddenhumans-2015,

title={Modeling 3D Environments through Hidden Human Context},

author={Yun Jiang and Hema S Koppula and Ashutosh Saxena},

year={2015},

journal={Tech Report}

}Abstract: The idea of modeling object-object relations has been widely leveraged in many scene understanding applications. However, as the objects are designed by humans and for human usage, when we reason about a human environment, we reason about it through an interplay between the environment, objects and humans. In this paper, we model environments not only through objects, but also through latent human poses and human-object interactions.

In order to handle the large number of latent human poses and a large variety of their interactions with objects, we present Infinite Latent Conditional Random Field (ILCRF) that models a scene as a mixture of CRFs generated from Dirichlet processes. In each CRF, we model objects and object-object relations as existing nodes and edges, and hidden human poses and human-object relations as latent nodes and edges. ILCRF generatively models the distribution of different CRF structures over these latent nodes and edges. We apply the model to the challenging applications of 3D scene labeling and robotic scene arrangement. In extensive experiments, we show that our model significantly outperforms the state-of-the-art results in both applications. We further use our algorithm on a robot for arranging objects in a new scene using the two applications aforementioned.

Parts of this journal submission have been published as the following conference papers: ICML'12 (scene arrangement), ISER'12 (robotics scene arrangement), CVPR'13 (oral, 3D scene labeling), and RSS'13 (ILCRF algorithm for scene arrangement)

-

Watch-n-Patch: Unsupervised Understanding of Actions and Relations, Chenxia Wu, Jiemi Zhang, Silvio Savarese, Ashutosh Saxena. In Computer Vision and Pattern Recognition (CVPR), 2015. [PDF, journal version, project page]

@article{chenxiawu_watchnpatch_2015,

title={Watch-n-Patch: Unsupervised Understanding of Actions and Relations},

author={Chenxia Wu and Jiemi Zhang and Silvio Savarese and Ashutosh Saxena},

year={2015},

booktitle={Computer Vision and Pattern Recognition (CVPR)}

}We focus on modeling human activities comprising multiple actions in a completely unsupervised setting. Our model learns the high-level action co-occurrence and temporal relations between the actions in the activity video. We consider the video as a sequence of short-term action clips, called action-words, and an activity is about a set of action-topics indicating which actions are present in the video. Then we propose a new probabilistic model relating the action-words and the action-topics. It allows us to model long-range action relations that commonly exist in the complex activity, which is challenging to capture in the previous works.

We apply our model to unsupervised action segmentation and recognition, and also to a novel application that detects forgotten actions, which we call action patching. For evaluation, we also contribute a new challenging RGB-D activity video dataset recorded by the new Kinect v2, which contains several human daily activities as compositions of multiple actions interacted with different objects. The extensive experiments show the effectiveness of our model.

-

PlanIt: A Crowdsourcing Approach for Learning to Plan Paths from Large Scale Preference Feedback. Ashesh Jain, Debarghya Das, Ashutosh Saxena. In International Conference on Robotics and Automation (ICRA), 2015. [PDF, PlanIt website]

@inproceedings{planit-jain-das-saxena-2014,

title={PlanIt: A Crowdsourcing Approach for Learning to Plan Paths from Large Scale Preference Feedback},

author={Ashesh Jain and Debarghya Das and Ashutosh Saxena},

year={2015},

booktitle={ICRA}

}Abstract: We consider the problem of learning user preferences over robot trajectories in environments rich in objects and humans. This is challenging because the criterion defining a good trajectory varies with users, tasks and interactions in the environments. We use a cost function to represent how preferred the trajectory is; the robot uses this cost function to generate a trajectory in a new environment. In order to learn this cost function, we design a system - PlanIt, where non-expert users can see robots motion for different asks and label segments of the video as good/bad/neutral. Using this weak, noisy labels, we learn the parameters of our model. Our model is a generative one, where the preferences are expressed as function of grounded object affordances. We test our approach on 112 different environments, and our extensive experiments show that we can learn meaningful preferences in the form of grounded planning affordances, and then use them to generate preferred trajectories in human environments.

-

Robo Brain: Large-Scale Knowledge Engine for Robots, Ashutosh Saxena, Ashesh Jain, Ozan Sener, Aditya Jami, Dipendra K Misra, Hema S Koppula. International Symposium on Robotics Research (ISRR), 2015. [PDF, arxiv, project page] (Earlier Cornell Tech Report, Aug 2014.)

@article{saxena_robobrain2014,

title={Robo Brain: Large-Scale Knowledge Engine for Robots},

author={Ashutosh Saxena and Ashesh Jain and Ozan Sener and Aditya Jami and Dipendra K Misra and Hema S Koppula},

year={2015},

journal={International Symposium on Robotics Research (ISRR)}

}Abstract: In this paper we introduce a knowledge engine, which learns and shares knowledge representations, for robots to carry out a variety of tasks. Building such an engine brings with it the challenge of dealing with multiple data modalities including symbols, natural language, haptic senses, robot trajectories, visual features and many others. The knowledge stored in the engine comes from multiple sources including physical interactions that robots have while performing tasks (perception, planning and control), knowledge bases from the Internet and learned representations from several robotics research groups.

We discuss various technical aspects and associated challenges such as modeling the correctness of knowledge, inferring latent information and formulating different robotic tasks as queries to the knowledge engine. We describe the system architecture and how it supports different mechanisms for users and robots to interact with the engine. Finally, we demonstrate its use in three important research areas: grounding natural language, perception, and planning, which are the key building blocks for many robotic tasks. This knowledge engine is a collaborative effort and we call it RoboBrain: http://www.robobrain.me

-

Tell Me Dave: Context-Sensitive Grounding of Natural Language to Mobile Manipulation Instructions, Dipendra K Misra, Jaeyong Sung, Kevin Lee, Ashutosh Saxena. In Robotics: Science and Systems (RSS), 2014. [PDF, project+video]

@inproceedings{misra_sung_lee_saxena_rss2014_groundingnlp,

title="Tell Me Dave: Context-Sensitive Grounding of Natural Language to Mobile Manipulation Instructions",

author="Dipendra K Misra and Jaeyong Sung and Kevin Lee and Ashutosh Saxena",

year="2014",

booktitle="Robotics: Science and Systems (RSS)",

}Abstract: We consider performing a sequence of mobile manipulation tasks with instructions given in natural language (NL). Given a new environment, even a simple task such as of boiling water would be performed quite differently depending on the presence, location and state of the objects. We start by collecting a dataset of task descriptions in free-form natural language and the corresponding grounded task-logs of the tasks performed in a robot simulator. We then build a library of verb-environment-instructions that represent possible instructions for each verb in that environment---these may or may not be valid for a different environment and task context.

We present a model that takes into account the variations in natural language, and ambiguities in grounding them to robotic instructions with appropriate environment context and task constraints. Our model also handles incomplete or noisy NL instructions. Our model is based on an energy function that encodes such properties in a form isomorphic to a conditional random field. In evaluation, we show that our model produces sequences that perform the task successfully in a simulator and also significantly outperforms the state-of-the-art. We also demonstrate that our output instruction sequences being performed on a PR2 robot.

-

Learning Haptic Representation for Manipulating Deformable Food Objects. Mevlana Gemici, Ashutosh Saxena. In International Conference on Intelligent Robotics and Systems (IROS), 2014. (best cognitive robotics paper award) [PDF, video]

@inproceedings{gemici-saxena-learninghaptic_food_2014,

title={Learning Haptic Representation for Manipulating Deformable Food Objects},

author={Mevlana Gemici and Ashutosh Saxena},

year={2014},

booktitle={IROS}

}Abstract: Manipulation of complex deformable semi-solids such as food objects is an important skill for personal robots to have. In this work, our goal is to model and learn the physical properties of such objects. We design actions involving use of tools such as forks and knives that obtain haptic data containing information about the physical properties of the object. We then design appropriate features and use supervised learning to map these features to certain physical properties (hardness, plasticity, elasticity, tensile strength, brittleness, adhesiveness). Additionally, we present a method to compactly represent the robot's beliefs about the object's properties using a generative model, which we use to plan appropriate manipulation actions. We extensively evaluate our approach on a dataset including haptic data from 12 categories of food (including categories not seen before by the robot) obtained in 941 experiments. Our robot prepared a salad during 60 sequential robotic experiments where it made a mistake in only 4 instances.

-

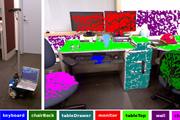

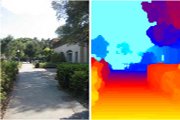

Hierarchical Semantic Labeling for Task-Relevant RGB-D Perception, Chenxia Wu, Ian Lenz, Ashutosh Saxena. In Robotics: Science and Systems (RSS), 2014. [PDF, webpage]

@inproceedings{wulenzsaxena2014_hierarchicalrgbdlabeling,

title="Hierarchical Semantic Labeling for Task-Relevant RGB-D Perception",

author="Chenxia Wu and Ian Lenz and Ashutosh Saxena",

year="2014",

booktitle="Robotics: Science and Systems (RSS)",

}Abstract: Semantic labeling of RGB-D scenes is very important in enabling robots to perform mobile manipulation tasks, but different tasks may require entirely different sets of labels. For example, when navigating to an object, we may need only a single label denoting its class, but to manipulate it, we might need to identify individual parts. In this work, we present an algorithm that produces hierarchical labelings of a scene, following is-part-of and is-type-of relationships. Our model is based on a Conditional Random Field that relates pixel-wise and pair-wise observations to labels. We encode hierarchical labeling constraints into the model while keeping inference tractable. Our model thus predicts different specificities in labeling based on its confidence---if it is not sure whether an object is Pepsi or Sprite, it will predict soda rather than making an arbitrary choice. In extensive experiments, both offline on standard datasets as well as in online robotic experiments, we show that our model outperforms other state-of-the-art methods in labeling performance as well as in success rate for robotic tasks.

-

Modeling High-Dimensional Humans for Activity Anticipation using Gaussian Process Latent CRFs, Yun Jiang, Ashutosh Saxena. In Robotics: Science and Systems (RSS), 2014. [PDF]

@inproceedings{jiangsaxena2014_humanmodeling-gplcrf,

title="Modeling High-Dimensional Humans for Activity Anticipation using Gaussian Process Latent CRFs",

author="Yun Jiang and Ashutosh Saxena",

year="2014",

booktitle="Robotics: Science and Systems (RSS)",

}Abstract: For robots, the ability to model human configurations and temporal dynamics is crucial for the task of anticipating future human activities, yet requires conflicting properties: On one hand, we need a detailed high-dimensional description of human configurations to reason about the physical plausibility of the prediction; on the other hand, we need a compact representation to be able to parsimoniously model the relations between the human and the environment.

We therefore propose a new model, GP-LCRF, which admits both the high-dimensional and low-dimensional representation of humans. It assumes that the high-dimensional representation is generated from a latent variable corresponding to its low-dimensional representation using a Gaussian process. The generative process not only defines the mapping function between the high- and low-dimensional spaces, but also models a distribution of humans embedded as a potential function in GP-LCRF along with other potentials to jointly model the rich context among humans, objects and the activity. Through extensive experiments on activity anticipation, we show that our GP-LCRF consistently outperforms the state-of-the-art results and reduces the predicted human trajectory error by 11.6%.

-

Physically-Grounded Spatio-Temporal Object Affordances. Hema S Koppula, Ashutosh Saxena. In European Conference on Computer Vision (ECCV), 2014. [PDF, webpage]

@article{koppula-anticipatoryplanning-iser2014,

title={Physically-Grounded Spatio-Temporal Object Affordances},

author={Hema Koppula and Ashutosh Saxena},

year={2014},

journal={ECCV}

}Abstract: Objects in human environments support various functionalities which govern how people interact with their environments in order to perform tasks. In this work, we discuss how to represent and learn a functional understanding of an environment in terms of object affordances. Such an understanding is useful for many applications such as activity detection and assistive robotics. Starting with a semantic notion of affordances, we present a generative model that takes a given environment and human intention into account, and grounds the affordances in the form of spatial locations on the object and temporal trajectories in the 3D environment. The probabilistic model also allows uncertainties and variations in the grounded affordances. We apply our approach on RGB-D videos from Cornell Activity Dataset, where we first show that we can successfully ground the affordances, and we then show that learning such affordances improves performance in the labeling tasks.

-

Anticipatory Planning for Human-Robot Teams. Hema S Koppula, Ashesh Jain, Ashutosh Saxena. In 14th International Symposium on Experimental Robotics (ISER), 2014. [PDF, webpage]

@article{koppula-anticipatoryplanning-iser2014,

title={Anticipatory Planning for Human-Robot Teams},

author={Hema Koppula and Ashesh Jain and Ashutosh Saxena},

year={2014},

journal={ISER}

}Abstract: When robots work alongside humans for performing collaborative tasks, they need to be able to anticipate human's future actions and plan appropriate actions. The tasks we consider are performed in contextually-rich environments containing objects, and there is a large variation in the way humans perform these tasks. We use a graphical model to represent the state-space, where we model the humans through their low-level kinematics as well as their high-level intent, and model their interactions with the objects through physically-grounded object affordances. This allows our model to anticipate a belief about possible future human actions, and we model the human's and robot's behavior through an MDP in this rich state-space. We further discuss that due to perception errors and the limitations of the model, the human may not take the optimal action and therefore we present robot's anticipatory planning with different behaviors of the human within the model's scope. In experiments on Cornell Activity Dataset, we show that our method performs better than various baselines for collaborative planning.

-

3D Reasoning from Blocks to Stability. Zhaoyin Jia, Andy Gallagher, Ashutosh Saxena, Tsuhan Chen. In IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 2014. [PDF]

@article{jia-3d-stability-pami,

title={3D Reasoning from Blocks to Stability},

author={Zhaoyin Jia and Andy Gallagher and Ashutosh Saxena and Tsuhan Chen},

year={2014},

journal={IEEE Trans PAMI}

}Abstract: Objects occupy physical space and obey physical laws. To truly understand a scene, we must reason about the space that objects in it occupy, and how each objects is supported stably by each other. In other words, we seek to understand which objects would, if moved, cause other objects to fall. This 3D volumetric reasoning is important for many scene understanding tasks, ranging from segmentation of objects to perception of a rich 3D, physically well-founded, interpretations of the scene. In this paper, we propose a new algorithm to parse a single RGB-D image with 3D block units while jointly reasoning about the segments, volumes, supporting relationships and object stability. Our algorithm is based on the intuition that a good 3D representation of the scene is one that fits the depth data well, and is a stable, self-supporting arrangement of objects (i.e., one that does not topple). We design an energy function for representing the quality of the block representation based on these properties. Our algorithm fits 3D blocks to the depth values corresponding to image segments, and iteratively optimizes the energy function. Our proposed algorithm is the first to consider stability of objects in complex arrangements for reasoning about the underlying structure of the scene. Experimental results show that our stability-reasoning framework improves RGB-D segmentation and scene volumetric representation.

-

Synthesizing Manipulation Sequences for Under-Specified Tasks using Unrolled Markov Random Fields. Jaeyong Sung, Bart Selman, Ashutosh Saxena. In International Conference on Intelligent Robotics and Systems (IROS), 2014. [PDF]

@inproceedings{sung-selman-saxena-learningsequenceofcontrollers_2014,

title={Synthesizing Manipulation Sequences for Under-Specified Tasks using Unrolled Markov Random Fields},

author={Jaeyong Sung and Bart Selman and Ashutosh Saxena},

year={2014},

booktitle={IROS}

}Abstract: Many tasks in human environments require performing a sequence of navigation and manipulation steps involving objects. In unstructured human environments, the location and configuration of the objects involved often change in unpredictable ways. This requires a high-level planning strategy that is robust and flexible in an uncertain environment. We propose a novel dynamic planning strategy, which can be trained from a set of example sequences. High level tasks are expressed as a sequence of primitive actions or controllers (with appropriate parameters). Our score function, based on Markov Random Field (MRF), captures the relations between environment, controllers, and their arguments. By expressing the environment using sets of attributes, the approach generalizes well to unseen scenarios. We train the parameters of our MRF using a maximum margin learning method. We provide a detailed empirical validation of our overall framework demonstrating successful plan strategies for a variety of tasks.

-

Special issue on autonomous grasping and manipulation. Heni Ben Amor, Ashutosh Saxena, Nicolas Hudson, Jan Peters. Autonomous Robots, Volume 36, Issue 1-2, January 2014. [PDF, Special issue link]

@book{specialissuemanipulation2013,

title="Special issue on autonomous grasping and manipulation",

editor="Heni Ben Amor and Ashutosh Saxena and Nicolas Hudson and Jan Peters",

year="2014",

publisher="Springer: Autonomous Robots"

} -

Learning Trajectory Preferences for Manipulators via Iterative Improvement, Ashesh Jain, Brian Wojcik, Thorsten Joachims, Ashutosh Saxena. In Neural Information Processing Systems (NIPS), 2013. [PDF, project+video]

@inproceedings{jainsaxena2013_trajectorypreferences,

title="Learning Trajectory Preferences for Manipulators via Iterative Improvement",

author="Ashesh Jain and Brian Wojcik and Thorsten Joachims and Ashutosh Saxena",

year="2013",

booktitle="Neural Information Processing Systems (NIPS)",

}Abstract: We consider the problem of learning good trajectories for manipulation tasks. This is challenging because the criterion defining a good trajectory varies with users, tasks and environments. In this paper, we propose a co-active online learning framework for teaching robots the preferences of its users for object manipulation tasks. The key novelty of our approach lies in the type of feedback expected from the user: the human user does not need to demonstrate optimal trajectories as training data, but merely needs to iteratively provide trajectories that slightly improve over the trajectory currently proposed by the system. We argue that this co-active preference feedback can be more easily elicited from the user than demonstrations of optimal trajectories, which are often challenging and non-intuitive to provide on high degrees of freedom manipulators. Nevertheless, theoretical regret bounds of our algorithm match the asymptotic rates of optimal trajectory algorithms. We demonstrate the generalizability of our algorithm on a variety of grocery checkout tasks, for whom, the preferences were not only influenced by the object being manipulated but also by the surrounding environment.

An earlier version of this work was presented at the ICML workshop on Robot Learning, June 2013. [PDF]

-

Anticipating Human Activities using Object Affordances for Reactive Robotic Response, Hema S Koppula, Ashutosh Saxena. In Robotics: Science and Systems (RSS), 2013. (best student paper award, best paper runner-up) [PDF, project page]

@inproceedings{koppula2013_anticipatingactivities,

title={Anticipating Human Activities using Object Affordances for Reactive Robotic Response},

author={Hema Koppula and Ashutosh Saxena},

year={2013},

booktitle={RSS}

}Abstract: Anticipating which activities will a human do next (and how) can enable an assistive robot to plan ahead for reactive responses in human environments. The challenge, however, is two-fold: We need to capture the rich context for modeling the activities and object affordances, and we need to anticipate the distribution over a large space of future human activities. In this work, we represent each possible future using an anticipatory temporal conditional random field (ATCRF) that models the rich spatial-temporal relations through object affordances. We then consider each ATCRF as a particle and represent the distribution over the potential futures using a set of particles.

-

Infinite Latent Conditional Random Fields for Modeling Environments through Humans, Yun Jiang, Ashutosh Saxena. In Robotics: Science and Systems (RSS), 2013. [PDF, project page]

@inproceedings{jiang_2013_ilcrf_modelingenvironment_humans,

title={Infinite Latent Conditional Random Fields for Modeling Environments through Humans},

author={Yun Jiang and Ashutosh Saxena},

year={2013},

booktitle={RSS}

}Abstract: In this paper, we model environments not only through objects, but also through latent human poses and human-object interactions. However, the number of potential human poses is large and unknown, and the human-object interactions vary not only in type but also in which human pose relates to each object. In order to handle such properties, we present Infinite Latent Conditional Random Fields (ILCRFs) that model a scene as a mixture of CRFs generated from Dirichlet processes. Each CRF represents one possible explanation of the scene. In addition to visible object nodes and edges, it generatively models the distribution of different CRF structures over the latent human nodes and corresponding edges.

(Full journal version, under submission: Modeling 3D Environments through Hidden Human Context, 2014.)

-

Deep Learning for Detecting Robotic Grasps, Ian Lenz, Honglak Lee, Ashutosh Saxena. In Robotics: Science and Systems (RSS), 2013. [PDF, arXiv, more]

@inproceedings{lenz2013_deeplearning_roboticgrasp,

title={Deep Learning for Detecting Robotic Grasps},

author={Ian Lenz and Honglak Lee and Ashutosh Saxena},

year={2013},

booktitle={RSS}

}Abstract: We consider the problem of detecting robotic grasps in an RGB-D view of a scene containing objects. In this work, we apply a deep learning approach to solve this problem, which avoids time-consuming hand-design of features. One challenge is that we need to handle multimodal inputs well, for which we present a method to apply structured regularization on the weights based on multimodal group regularization. We demonstrate that our method outperforms the previous state-of-the-art methods in robotic grasp detection, and can be used to successfully execute grasps on a Baxter robot.

(An earlier version was presented in International Conference on Learning Representations (ICLR), 2013.)

-

Learning Spatio-Temporal Structure from RGB-D Videos for Human Activity Detection and Anticipation, Hema S. Koppula, Ashutosh Saxena. In International Conference on Machine Learning (ICML), 2013. [PDF, project page]

@inproceedings{koppula-icml2013-learninggraphs-activities,

title={Learning Spatio-Temporal Structure from RGB-D Videos for Human Activity Detection and Anticipation},

author={Hema S. Koppula and Ashutosh Saxena},

year={2013},

booktitle={ICML}

}Abstract: We consider the problem of detecting past activities as well as anticipating which activity will happen in the future and how. We start by modeling the rich spatio-temporal relations between human poses and objects (called affordances) using a conditional random field (CRF). However, because of the ambiguity in the temporal segmentation of the sub-activities that constitute an activity, in the past as well as in the future, multiple graph structures are possible. In this paper, we reason about these alternate possibilities by reasoning over multiple possible graph structures. We obtain them by approximating the graph with only additive features, which lends to efficient dynamic programming. Starting with this proposal graph structure, we then design moves to obtain several other likely graph structures. We then show that our approach improves the state-of-the-art significantly for detecting past activities as well as for anticipating future activities.

-

Hallucinated Humans as the Hidden Context for Labeling 3D Scenes, Yun Jiang, Hema S Koppula, Ashutosh Saxena. In Computer Vision and Pattern Recognition (CVPR), 2013 (oral). [PDF, project page]

@inproceedings{jiang-hallucinatinghumans-labeling3dscenes-cvpr2013,

title={Hallucinated Humans as the Hidden Context for Labeling 3D Scenes},

author={Yun Jiang and Hema Koppula and Ashutosh Saxena},

year={2013},

booktitle={CVPR}

}Abstract: For scene understanding, one popular approach has been to model the object-object relationships. In this paper, we hypothesize that such relationships are only an artifact of certain hidden factors, such as humans. For example, the objects, monitor and keyboard, are strongly spatially correlated only because a human types on the keyboard while watching the monitor. Our goal is to learn this hidden human context (i.e., the human-object relationships), and also use it as a cue for labeling the scenes. We present Infinite Factored Topic Model (IFTM), where we consider a scene as being generated from two types of topics: human configurations and human-object relationships. This enables our algorithm to hallucinate the possible configurations of the humans in the scene parsimoniously.

(Full journal version, under submission: Modeling 3D Environments through Hidden Human Context, 2014.)

-