ConvNetJS

Deep Learning in your browser

Vols

The entire library is based around transforming 3-dimensional volumes of numbers. These volumes are stored in the Vol class, which is at the heart of the library. The Vol class is a wrapper around:- a 1-dimensional list of numbers (the activations, in field .w)

- their gradients (field .dw)

- and lastly contains three dimensions (fields .sx, .sy, .depth).

// create a Vol of size 32x32x3, and filled with random numbers var v = new convnetjs.Vol(32, 32, 3); var v = new convnetjs.Vol(32, 32, 3, 0.0); // same volume but init with zeros var v = new convnetjs.Vol(1, 1, 3); // a 1x1x3 Vol with random numbers // you can also initialize with a specific list. E.g. create a 1x1x3 Vol: var v = new convnetjs.Vol([1.2, 3.5, 3.6]); // the Vol is a wrapper around two lists: .w and .dw, which both have // sx * sy * depth number of elements. E.g: v.w[0] // contains 1.2 v.dw[0] // contains 0, because gradients are initialized with zeros // you can also access the 3-D Vols with getters and setters // but these are subject to function call overhead var vol3d = new convnetjs.Vol(10, 10, 5); vol3d.set(2,0,1,5.0); // set coordinate (2,0,1) to 5.0 vol3d.get(2,0,1) // returns 5.0

Net

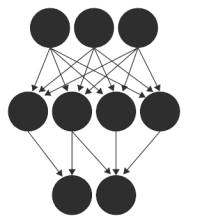

A Net is a very simple class that simply contains a list of Layers (discussed below). When an example (in form of a Vol) is passed through the Net, the Net simply iterates through all of its layers and propagates the example through each one in turn, and returns the result of the last layer. Similarly, during backpropagation the Net calls the backward() function of each layer in turn to compute the gradient.

Layers

As mentioned, every Network is just a linear list of layers. Your first layer must be 'input' (in which you declare sizes of your input), your last layer must be a loss layer ('softmax' or 'svm' for classification, or 'regression' for regression). Every layer takes an input Vol and produces a new output Vol, which is why I prefer to refer to them as transformers.

Before going into details of the types of available layers, lets look at an example at this point that ties these concepts together in a concrete form:

var layer_defs = [];

// minimal network: a simple binary SVM classifer in 2-dimensional space

layer_defs.push({type:'input', out_sx:1, out_sy:1, out_depth:2});

layer_defs.push({type:'svm', num_classes:2});

// create a net

var net = new convnetjs.Net();

net.makeLayers(layer_defs);

// create a 1x1x2 volume of input activations:

var x = new convnetjs.Vol(1,1,2);

x.w[0] = 0.5; // w is the field holding the actual data

x.w[1] = -1.3;

// a shortcut for the above is var x = new convnetjs.Vol([0.5, -1.3]);

var scores = net.forward(x); // pass forward through network

// scores is now a Vol() of output activations

console.log('score for class 0 is assigned:' + scores.w[0]);

Generic Neural Network Layers

Lets now step through the details of the Layers:

{type:'input', out_sx:1, out_sy:1, out_depth:20} // declares 20-dimensional input points

{type:'input', out_sx:24, out_sy:24, out_depth:3} // input is 24x24 RGB image

// create layer of 10 linear neurons (no activation function by default)

{type:'fc', num_neurons:10}

// create layer of 10 neurons that use sigmoid activation function

{type:'fc', num_neurons:10, activation:'sigmoid'} // x->1/(1+e^(-x))

{type:'fc', num_neurons:10, activation:'tanh'} // x->tanh(x)

{type:'fc', num_neurons:10, activation:'relu'} // rectified linear units: x->max(0,x)

// maxout units: (x,y)->max(x,y). num_neurons must be divisible by 2.

// maxout "consumes" multiple filters for every output. Thus, this line

// will actually produce only 5 outputs in this layer. (group_size is 2)

// by default.

{type:'fc', num_neurons:10, activation:'maxout'}

// specify group size in maxout. num_neurons must be divisible by group_size.

// here, output will be 3 neurons only (3 = 12/4)

{type:'fc', num_neurons:12, group_size: 4, activation:'maxout'}

// dropout half the units (probability 0.5) in this layer during training, for regularization

{type:'fc', num_neurons:10, activation:'relu', drop_prob: 0.5}

layer_defs.push({type:'softmax', num_classes:2});

layer_defs.push({type:'svm', num_classes:2});

When you are training a classifier layer, your classes must be numbers that begin at 0. For a binary problem, these would be class 0 and class 1. For K classes, the classes are 0..K-1.

// train 3 real-valued outputs

layer_defs.push({type:'regression', num_neurons: 3});

When you are training a regression layer, you must pass the trainer a list of target values.

Layers for Convolutional Networks

If you are training Convolutional Neural Networks (on images, presumably), these layers will be useful:

W2 = (W1 - sx + pad*2)/stride + 1

H2 = (H1 - sy + pad*2)/stride + 1

D2 = filters

Every filter slides spatially along all x,y positions in the input volume computing a single activation map, a sheet of activations in the output volume. Note that he extent along the "depth" direction (the 3rd direction) is always the entire input depth, while the connectivity is restricted spatially. Lets see a few examples:

// 8 5x5 filters will be convolved with the input

{type:'conv', sx:5, filters:8, stride:1, activation:'relu'}

// 10 3x3 filters will be convolved with the input. Padding of 1 applied automatically

{type:'conv', sx:3, pad:1, filters:10, stride:1, activation:'relu'}

// 10 3x4 filters will be applied (height of filter is different here)

{type:'conv', sx:3, sy: 4, pad:1, filters:10, stride:1, activation:'relu'}

// first layer of a Krizhevsky et al (2012) net.

// On input of size 227x227x3 gives volume 55x55x96

{type:'conv', sx:11, filters:96, stride:4, activation:'relu'}

W2 = (W1 - sx + pad*2)/stride + 1

H2 = (H1 - sx + pad*2)/stride + 1

D2 = D1

In particular note that the output depth is equal to input depth, because the max pooling happens in every slice of the input volume (activation maps) independently. The parameters passed to pooling layer are exactly same as to the convolutional layer (but of course, without 'activation'):

// perform max pooling in 2x2 non-overlapping neighborhoods

{type:'pool', sx:2, stride:2}

{type:'lrn', k:1, n:3, alpha:0.1, beta:0.75}

Trainers

So, we've seen how to define a network. Lets see how we actually train one. We will need the Trainer class. The Trainer class takes a network and parameters, and then you pass it examples and the associated correct labels (class labels, or target values in regression). The trainer passes the examples through the network, sees the network predictions, and then adjusts the network weights to make the provided correct labels more likely for that particular input in the future. If you do this many, times for your entire dataset in turns, it will over time transform the network to map all inputs to correct outputs!

// example SGD+Momentum trainer. Performs a weight update every 10 examples

var trainer = new convnetjs.Trainer(net, {method: 'sgd', learning_rate: 0.01,

l2_decay: 0.001, momentum: 0.9, batch_size: 10,

l1_decay: 0.001});

// example that uses adadelta. Reasonable for beginners.

var trainer = new convnetjs.Trainer(net, {method: 'adadelta', l2_decay: 0.001,

batch_size: 10});

// example adagrad.

var trainer = new convnetjs.Trainer(net, {method: 'adagrad', l2_decay: 0.001,

l1_decay: 0.001, batch_size: 10});

The three main options for a trainer are specified with 'method' and can be 'sgd'/'adagrad'/'adadelta'. The equations for these can be seen in this paper. Also relevant, here is a ConvNetJS demo comparing these on MNIST. Here are some tips:

- If you are a newbie, I'd recommend using Adadelta or then Adagrad. They automatically adapt the learning rate and do it relatively reasonably.

- If you use SGD, you almost always want to use a non-zero momentum. 0.9 is often used for momentum. You need to play with the learning rate a bit: if it's too high, your network will never converge at best, and will die catastrophically at worst, especially if you use ReLU activations. But if it's too low, the network will take very long to train. You need to monitor the cost of the training (as explained below)

- You basically always want to use a non-zero l2_decay. If it's too high, the network will be regularized very strongly. This might be a good idea if you have very few training data. If your training error is also very low (so your network is crushing the training set perfectly), you may want to increase this a bit to have better generalization. If your training error is very high (so the network is struggling to learn your data), you may want to try to decrease it.

- Use l1_decay instead of l2_decay if you'd like your network to have sparse weights at the end, as l1 norm on weights is sparsity encouraging. If you have no idea what I'm talking about, don't touch l1_decay and leave it at 0 (default).

- Usually you want to use batch_size of 1. This basically controls how accurate the gradient steps of your network will be. If you let the network see 100 examples in a batch, it will be able to estimate a much better value for gradient before it actually takes the step. However, in practice a value of 1 (and having an appropriately small learning rate) is probably the best way to go.

- Q: I don't care about anything, just tell me what to use. A: okay, the 2nd example above (with 'adadelta') is probably good to try.

Lets see examples of actually training:

// the trainer takes Vol() inputs.

var x = new convnetjs.Vol(1,1,d);

x.w[0] = 1; // set first feature to 1. example

// if your loss on top is

// layer_defs.push({type:'svm', num_classes: 5});

// use something like... (lets say x is class 3)

var stats = trainer.train(x, 3);

// if your loss on top is

// layer_defs.push({type:'regression', num_neurons: 1});

// use something like... (note the LIST!)

var stats = trainer.train(x, [0.7]);

// if your loss on top is

// layer_defs.push({type:'regression', num_neurons: 3});

// use something like

var stats = trainer.train(x, [0.7, 0.1, 0.3]);

The returned object stats contains the cost (which you want to be decrease over time as you train, that's good). It also returns other things such as the time to propagate forward and backwards. Here's a last, little more realistic example of a training loop:

var trainer = new convnetjs.SGDTrainer(net, {learning_rate:0.01,

momentum:0.9, batch_size:16, l2_decay:0.001});

for(var i=0;i<my_data.length;i++) {

var x = new convnetjs.Vol(1,1,2,0.0); // a 1x1x2 volume initialized to 0's.

x.w[0] = my_data[i][0]; // Vol.w is just a list, it holds your data

x.w[1] = my_data[i][1];

trainer.train(x, my_labels[i]);

}

Saving and Loading Networks with JSON

Simply put, use the toJSON() and fromJSON() functions. For example to save and load a net:

// network outputs all of its parameters into json object var json = net.toJSON(); // the entire object is now simply string. You can save this somewhere var str = JSON.stringify(json); // later, to recreate the network: var json = JSON.parse(str); // creates json object out of a string var net2 = new convnetjs.Net(); // create an empty network net2.fromJSON(json); // load all parameters from JSON

After executing the last line, net2 should be an exact duplicate of the original net.

Utilities and Visualizations

See github repo for modules (vis and util inside build/) that do small useful things, like plot graphs and so on. See demos for examples on how to use this functionality for now.

Reinforcement Learning

There is a module available to do Reinforcement Learning using Deep Q Learning. This allows you to create a deeqlearn.Brain() instance that takes states and rewards over time and produces a discrete action. Over time, the brain automagically adjusts its weights to perform actions that maximize the expected, time-discounted reward.

For example, lets train an agent that observes 3-dimensional states and is asked to do one of two actions. Lets reward the agent only for action 0 for sake of very simple example:

var brain = new deepqlearn.Brain(3, 2); // 3 inputs, 2 possible outputs (0,1)

var state = [Math.random(), Math.random(), Math.random()];

for(var k=0;k<10000;k++) {

var action = brain.forward(state); // returns index of chosen action

var reward = action === 0 ? 1.0 : 0.0;

brain.backward([reward]); // <-- learning magic happens here

state[Math.floor(Math.random()*3)] += Math.random()*2-0.5;

}

brain.epsilon_test_time = 0.0; // don't make any more random choices

brain.learning = false;

// get an optimal action from the learned policy

var action = brain.forward(array_with_num_inputs_numbers);

Of course, there are many possible options you can set. have a look at the reinfocement learning demo rldemo.html inside demo/ folder, or see the Reinforcement Learning demo for more documentation.

For Reinforcement Learning you might also be interested in my other projects reinforcejs.