Philosophical Issues

"I would be glad to know your Lordship's opinion whether when my brain has lost its original structure, and when some hundred years after the same materials are fabricated so curiously as to become an intelligent being, whether, I say that being will be me; or, if, two or three such beings should be formed out of my brain; whether they will all be me, and consequently one and the same intelligent being."

— Thomas Reid letter to Lord Kames, 1775

One important philosophical dilemma that arises when we consider uploading consciousness is what it means to really be “alive.” We often look at science fiction and point out that robots, despite their capacity for intelligence, are not alive. If that is the case, and we someday figure out a way to upload our minds to a digital medium, are these copies of the original mind really alive, or are they just computer-created imitations? Another theory holds that energy is the principal definer of what it means to be alive. The Second Law of Thermodynamics says that the total entropy of an isolated system never decreases, so such a system drifts toward equilibrium. Life is intrinsically tied to the regulation of energy flow: by continually taking in and dissipating energy, living things manage to stay away from the equilibrium state. On this view, as long as any duplicates of the original mind likewise regulate their energy flow to stay out of equilibrium, while still abiding by the Second Law of Thermodynamics, they should be considered “alive.” Naturally, this leaves room for much debate on both sides, but that is just the tip of the iceberg.

A major philosophical issue with the concept of uploading or downloading consciousness comes into play when we consider whether the new digital mind would be the “same” as the original or just a duplicate. Sure, this duplicate would have all of the same memories, ideas, and personality, but does being a copy inherently make it “different” from the original? This dilemma becomes even more pressing if technology develops to the point where the original remains essentially unaltered throughout the uploading process, since the original and the copy would then exist side by side.

The Swampman thought experiment takes a closer look at this issue. In his 1987 paper entitled “Knowing One's Own Mind,” Donald Davidson ponders what would happen if, by some bizarre occurrence, he were to die in a swamp at the exact moment that a bolt of lightning spontaneously rearranged a collection of atoms so that they took on Davidson's exact form. This would result in what Davidson called the Swampman. While the Swampman would look and behave exactly like the original, Davidson claims that it would still be fundamentally different. For instance, even though Swampman would seem to recognize and be able to interact with all of Davidson's friends and family, he has never actually met them before. This ties into the notion of semantic externalism, the view that the meaning of someone's words is determined by factors external to the speaker: meaning does not derive solely from the brain. Davidson refers to this issue as the Duplicate Problem.

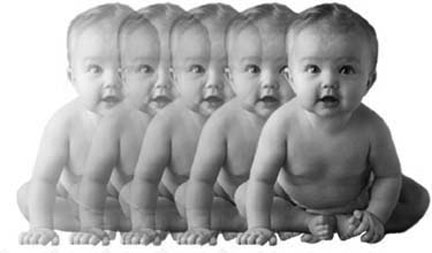

Another theoretical problem with the process of uploading consciousness is that most brain scanning technologies pose serious risks to the brain itself. Processes such as serial sectioning of the brain would be harmful, leaving the original brain damaged and fundamentally different. The question then arises as to what would occur if the original brain could somehow be kept 100% intact and unaltered. If this were the case, then the computer-based consciousness would be an exact copy of the biological person. This opens up the possibility of one or more copies being created in the exact image of the original brain.

With the simultaneous existence of multiple copies of the same person, it is assumed that they would eventually begin to develop new experiences as their paths diverged, despite the fact that up until the point of their creation they would be essentially the same as the original (e.g. in memories, personality, and feelings). This ultimately could result in countless complex variations.

These philosophical questions also have practical implications within the field of cryonics. If a cryopreserved brain can become the basis for an entirely new brain or set of brains, then the question arises as to whether or not the reconstructed brains are the same as the original.

Davidson proposes a Continuity Criterion as a solution to the aforementioned Duplicate Problem. According to the Continuity Criterion, there can exist only one original. Davidson argues this case because any duplicates that were created could not possibly be in the same location as the original. Although a “copy” may consider itself to be the original, it is not. This ties into the notion of continuity because the copies can never “be created in such a way as to have as much continuity-of-the-original as the original.” Because of this, a duplicate can never acquire the identity of the original.

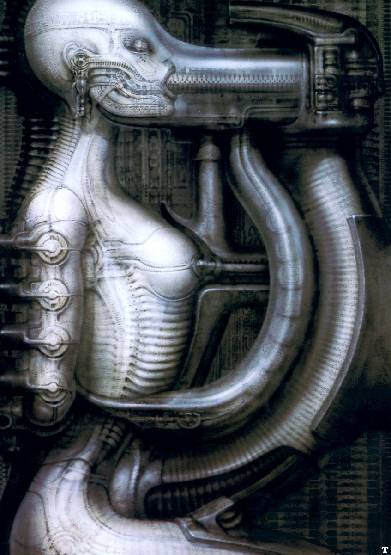

All of these concerns generally revolve around the brain itself, whether in its physical or digital state (i.e. as the original or as a duplicate). However, the philosophical issues surrounding uploading consciousness or mind downloading are just as heavily weighted in terms of the physiological makeup of these “duplicates.” While uploading consciousness only implies a digital copy of the original mind, the possibility of creating clones with the same memories, skills, and experiences as the original person forces us to consider the implications of the physical states of all instances of a mind.