Object Discovery in 3D Scenes via Shape Analysis

Andrej Karpathy, Stephen Miller, Li Fei-Fei

Abstract

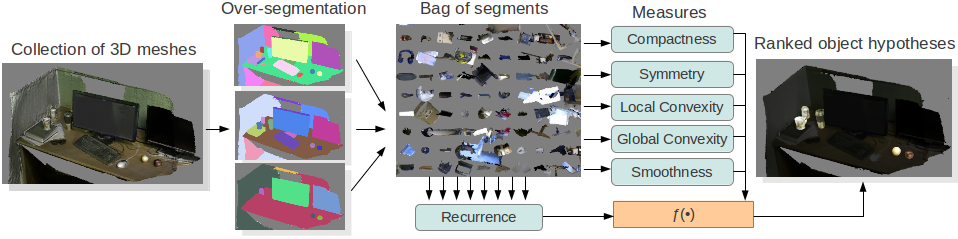

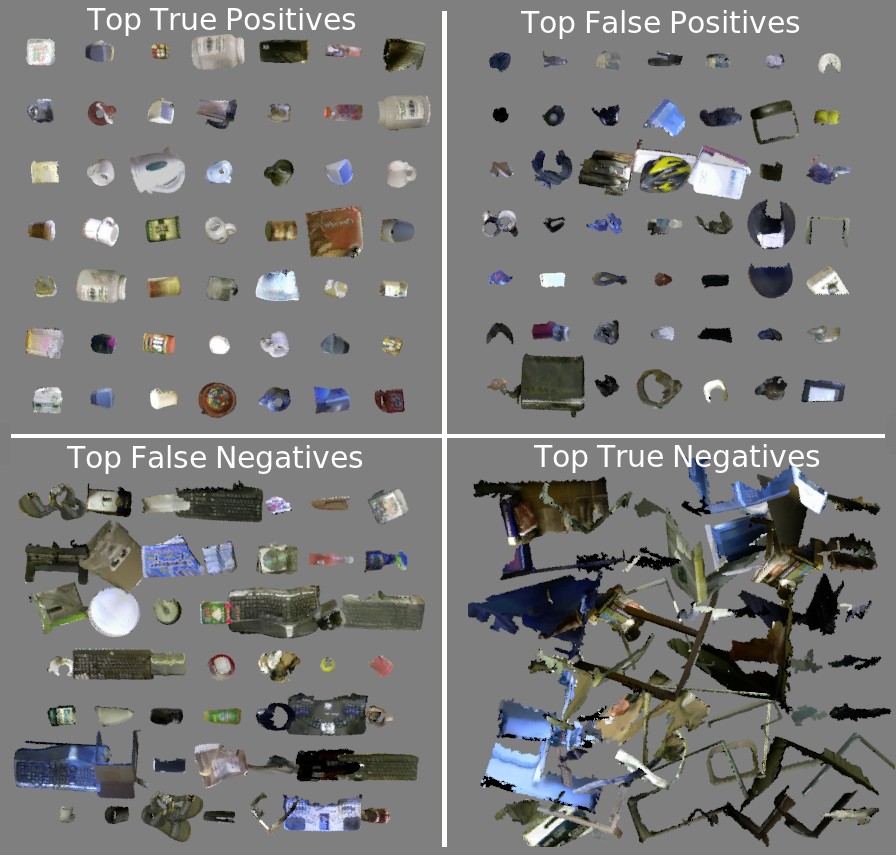

We present a method for discovering object models from 3D meshes of indoor environments. Our algorithm first decomposes the scene into a set of candidate mesh segments and then ranks each segment according to its "objectness", a quality that distinguishes objects from clutter. To do so, we propose five intrinsic shape measures: compactness, symmetry, smoothness, and local and global convexity. We additionally propose a recurrence measure, codifying the intuition that frequently occurring geometries are more likely to correspond to complete objects. We evaluate our method in both supervised and unsupervised regimes on a dataset of 58 indoor scenes collected using an open-source implementation of Kinect Fusion. We show that our approach can reliably and efficiently distinguish objects from clutter, achieving an Average Precision (AP) of 0.92. We make our dataset available to the public.
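The abstract lists local convexity among the intrinsic shape measures. The sketch below illustrates one plausible way to score it and is not taken from the paper or the released code: it assumes a triangle mesh with consistently oriented (outward, counter-clockwise) faces plus a precomputed list of adjacent face pairs, and reports the fraction of shared edges that are convex under a simple centroid-and-normal sign test. All names (`Triangle`, `isConvexEdge`, `localConvexity`) and the tolerance `eps` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical minimal geometry types (the released code builds on PCL instead).
using Vec3 = std::array<double, 3>;

struct Triangle {
  Vec3 a, b, c;  // vertices, assumed counter-clockwise when viewed from outside
};

Vec3 sub3(const Vec3& u, const Vec3& v) { return {u[0] - v[0], u[1] - v[1], u[2] - v[2]}; }

double dot3(const Vec3& u, const Vec3& v) { return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]; }

Vec3 cross3(const Vec3& u, const Vec3& v) {
  return {u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]};
}

Vec3 faceCentroid(const Triangle& t) {
  return {(t.a[0] + t.b[0] + t.c[0]) / 3.0, (t.a[1] + t.b[1] + t.c[1]) / 3.0,
          (t.a[2] + t.b[2] + t.c[2]) / 3.0};
}

// Unnormalized outward normal; only its direction matters for the sign test below.
Vec3 faceNormal(const Triangle& t) { return cross3(sub3(t.b, t.a), sub3(t.c, t.a)); }

// The edge between adjacent faces f and g counts as convex when each face's centroid
// does not lie in front of the other face's plane: (c_g - c_f) . n_f <= eps and
// (c_f - c_g) . n_g <= eps. eps is in unnormalized units (scale-dependent).
bool isConvexEdge(const Triangle& f, const Triangle& g, double eps = 1e-9) {
  const bool fromF = dot3(sub3(faceCentroid(g), faceCentroid(f)), faceNormal(f)) <= eps;
  const bool fromG = dot3(sub3(faceCentroid(f), faceCentroid(g)), faceNormal(g)) <= eps;
  return fromF && fromG;
}

// Hypothetical local-convexity score: fraction of adjacent face pairs joined by a
// convex edge (1.0 = every edge convex; 0.0 = every edge concave, or no edges at all).
double localConvexity(const std::vector<Triangle>& faces,
                      const std::vector<std::pair<std::size_t, std::size_t>>& adjacency) {
  if (adjacency.empty()) return 0.0;
  std::size_t convex = 0;
  for (const auto& edge : adjacency) {
    assert(edge.first < faces.size() && edge.second < faces.size());
    if (isConvexEdge(faces[edge.first], faces[edge.second])) ++convex;
  }
  return static_cast<double>(convex) / static_cast<double>(adjacency.size());
}
```

For the four faces of a closed tetrahedron, all six edges pass the test and the score is 1.0; for two faces meeting in a V-shaped valley, the single shared edge is concave and the score is 0.0. Testing from both faces makes the decision independent of the order in which a pair is listed.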
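The abstract summarizes ranking quality with an Average Precision score of 0.92. For readers unfamiliar with the metric, here is a minimal sketch of one common definition, non-interpolated AP over a ranked list of segments. The exact protocol used in the paper (interpolation, tie handling, per-scene versus pooled ranking) is not specified on this page and may differ. The function name and inputs are hypothetical: `scores` holds an objectness score per candidate segment and `isObject` its ground-truth label.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Non-interpolated average precision of a ranking: sort candidates by descending
// score and average the precision measured at the rank of each true object.
double averagePrecision(const std::vector<double>& scores, const std::vector<bool>& isObject) {
  assert(scores.size() == isObject.size());
  std::vector<std::size_t> order(scores.size());
  std::iota(order.begin(), order.end(), std::size_t{0});
  // stable_sort keeps the input order among tied scores (arbitrary but reproducible).
  std::stable_sort(order.begin(), order.end(),
                   [&scores](std::size_t i, std::size_t j) { return scores[i] > scores[j]; });
  std::size_t truePositives = 0;
  double precisionSum = 0.0;
  for (std::size_t rank = 0; rank < order.size(); ++rank) {
    if (isObject[order[rank]]) {
      ++truePositives;
      // Precision among the top (rank + 1) candidates.
      precisionSum += static_cast<double>(truePositives) / static_cast<double>(rank + 1);
    }
  }
  return truePositives == 0 ? 0.0 : precisionSum / static_cast<double>(truePositives);
}
```

For example, scores {0.9, 0.8, 0.7, 0.6} with labels {object, clutter, object, clutter} give precision 1/1 and 2/3 at the two objects, so AP = (1 + 2/3) / 2 ≈ 0.833. A perfect ranking, with every object scored above all clutter, yields AP = 1.0.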

full paper PDF

Code and Data

The code below reproduces the main figures of the paper. It is written in C++ and requires the Point Cloud Library (PCL).

- code (C++, Matlab, 0.8 MB)

- NOTE: The code no longer compiles, but here are instructions from a friend on the few changes needed.

- NOTE 2: The object/non-object annotations are not included, because releasing them would also require releasing the intermediate object segments, which total several gigabytes. However, the included annotation interface can be used to label the data yourself in less than 5 minutes.

- dataset of colored .ply mesh files (58 scenes, 178.4 MB)

Above: six example scenes from the dataset.

Pretty pictures of results

BibTeX

@inproceedings{Karpathy_ICRA2013,
  author = "Andrej Karpathy and Stephen Miller and Li Fei-Fei",
  title = "Object Discovery in 3D Scenes via Shape Analysis",
  booktitle = "International Conference on Robotics and Automation (ICRA)",
  year = "2013",
}

Acknowledgment

This research is partially supported by an Intel ISTC research grant. Stephen Miller is supported by the Hertz Foundation Google Fellowship and the Stanford Graduate Fellowship.