I was playing around with a state of the art Object Detector, the recently released

RCNN by

Ross Girshick. The method is described in detail in this

arXiv paper, and soon to be a CVPR 2014 paper. It takes an ImageNet pretrained Convolutional Network of

Krizhevsky et al. (i.e. the paper that rocked computer vision last year) and fine-tunes the network on

PASCAL VOC detection data (20 object classes, and 1 background class). At test time RCNN uses

Selective Search to extract ~2000 boxes that likely contain objects and evaluates the ConvNet on each one of them, followed by non-maximum suppression within each class. This usually takes on order of 20 seconds per image with a Tesla K40 GPU. RCNN uses

Caffe (a very nice C++ ConvNet library we use at Stanford too) to train the ConvNet models, and both are available under BSD on Github.

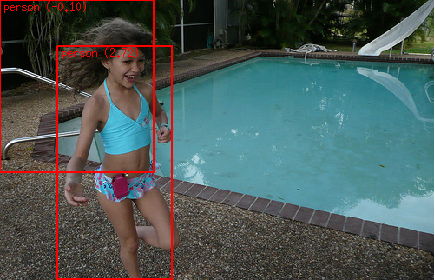

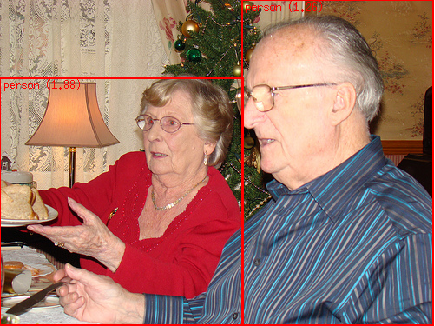

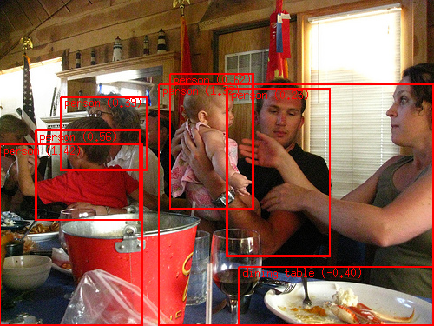

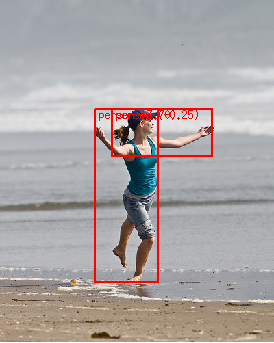

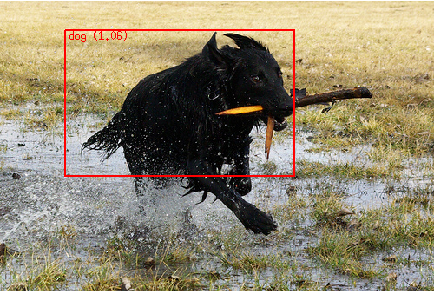

Below are some example results of running RCNN on some random images from Flickr. Keep in mind that the training data in PASCAL VOC contains only 20 classes (Aeroplanes, Bicycles, Birds, Boats, Bottles, Buses, Cars, Cats, Chairs, Cows, Dining tables, Dogs, Horses, Motorbikes, People, Potted plants, Sheep, Sofas, Trains, TV/Monitors), examples of the training data can be found

here. The number in the bracket next to every detection is the SVM score for that class (high = more confident) and I only display detections with score > -0.5. Also keep in mind that these are raw SVM scores, so technically they aren't exactly comparable across classes.