Abstract

We present the MAC network, a novel fully differentiable neural network architecture, designed to facilitate explicit and expressive reasoning. Drawing inspiration from first principles of computer organization, MAC moves away from monolithic black-box neural architectures towards a design that encourages both transparency and versatility. The model approaches problems by decomposing them into a series of attention-based reasoning steps, each performed by a novel recurrent Memory, Attention, and Composition (MAC) cell that maintains a separation between control and memory. By stringing the cells together and imposing structural constraints that regulate their interaction, MAC effectively learns to perform iterative reasoning processes that are directly inferred from the data in an end-to-end approach. We demonstrate the model's strength, robustness and interpretability on the challenging CLEVR dataset for visual reasoning, achieving a new state-of-the-art 98.9% accuracy, halving the error rate of the previous best model. More importantly, we show that the model is computationally-efficient and data-efficient, in particular requiring 5x less data than existing models to achieve strong results.

The MAC Network

The MAC network is an end-to-end differentiable model that performs an explicit multi-step reasoning process. Given a knowledge base (e.g. an image) and a task description (e.g. a question), the model infers a decomposition into a series of p reasoning operations that iteratively aggregate and manipulate information from the knowledge base to perform the task at hand.

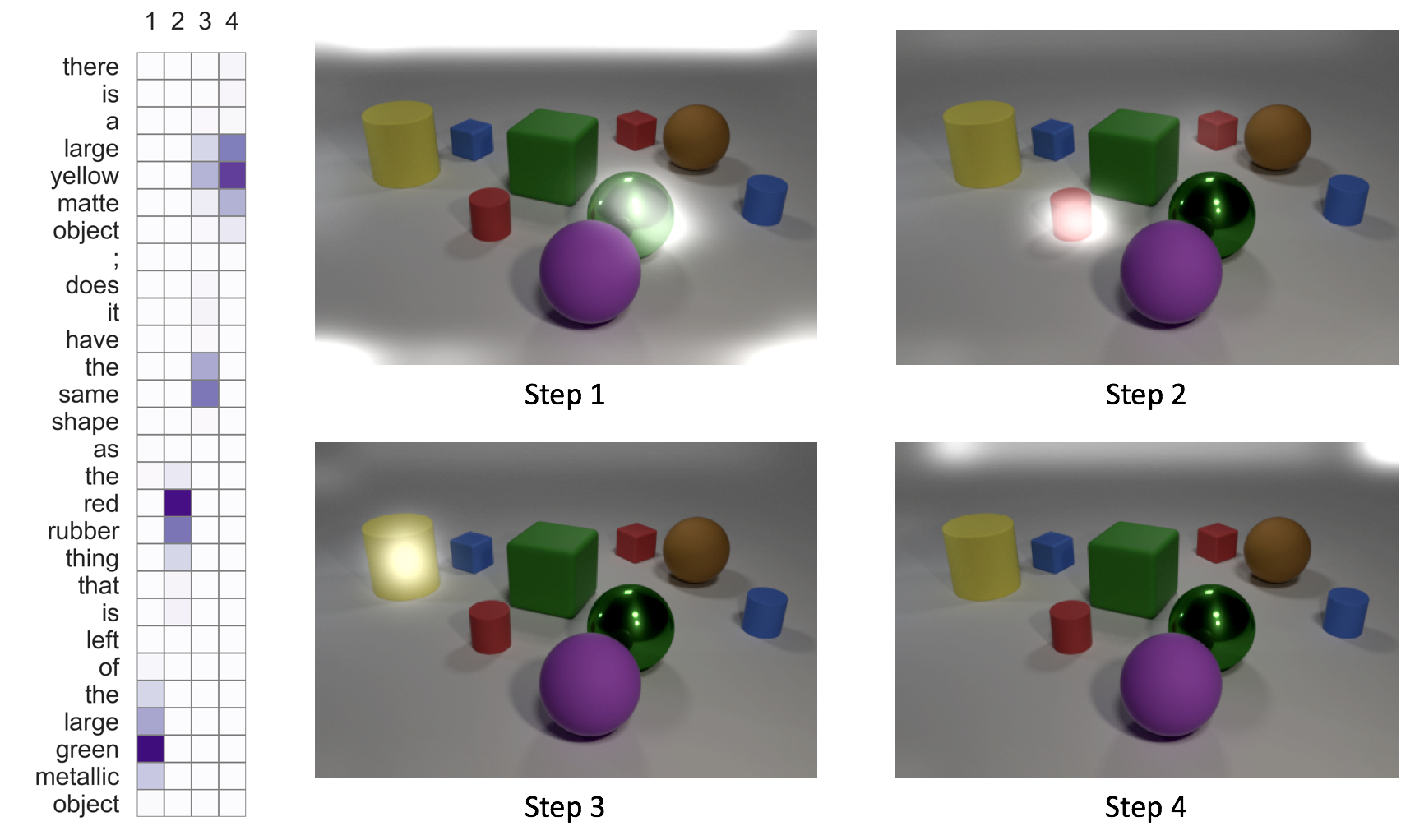

The figure above visualizes the MAC network's reasoning process for the CLEVR question: "There is a large yellow matte object; does it have the same shape as the red rubber thing that is left of the large green metallic object?" The model correctly tracks the relevant objects and the transitive relations between them, iteratively computing the final answer: Yes.

The Model Architecture

The model consists of three components:

The input unit transforms the raw image and question into distributed vector representations.

The core recurrent network reasons sequentially over the question by decomposing it into a series of operations (control) that retrieve information from the knowledge base and aggregate the results into a recurrent memory.

The output classifier computes the final answer using the question and the final memory state.
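The flow through these three components can be sketched as a short loop. This is a minimal illustration rather than the authors' implementation: `run_mac`, `toy_cell_step`, and `toy_classify` are hypothetical names, the "input unit" is reduced to averaging word vectors, and the initial control and memory states are assumptions chosen for simplicity.

```python
def run_mac(question_words, knowledge_base, cell_step, classify, num_steps):
    """Run num_steps reasoning steps, then classify from the final memory."""
    dim = len(question_words[0])
    # Input unit stand-in: the question vector is the mean of its word vectors.
    question = [sum(w[d] for w in question_words) / len(question_words)
                for d in range(dim)]
    control = list(question)   # assumption: control starts at the question vector
    memory = [0.0] * dim       # assumption: memory starts at zero
    # Core recurrent network: the same cell is applied num_steps times.
    for _ in range(num_steps):
        control, memory = cell_step(control, memory, question_words, knowledge_base)
    # Output classifier: uses the question and the final memory state.
    return classify(question, memory)


def toy_cell_step(control, memory, question_words, knowledge_base):
    # Placeholder cell: adds the knowledge-base mean to memory, keeps control.
    dim = len(memory)
    kb_mean = [sum(k[d] for k in knowledge_base) / len(knowledge_base)
               for d in range(dim)]
    return control, [m + x for m, x in zip(memory, kb_mean)]


def toy_classify(question, memory):
    # Placeholder classifier: thresholds the question-memory dot product.
    score = sum(q * m for q, m in zip(question, memory))
    return "yes" if score > 0 else "no"


answer = run_mac(
    question_words=[[1.0, 0.0], [0.0, 1.0]],
    knowledge_base=[[1.0, 1.0], [3.0, 1.0]],
    cell_step=toy_cell_step,
    classify=toy_classify,
    num_steps=4,
)
```

Passing the cell and classifier in as functions keeps the sketch independent of how each component is actually parameterized; in a trained model both would be learned.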

The MAC cell

The MAC recurrent cell consists of a control unit, a read unit, and a write unit, which operate over dual control and memory hidden states.

The control unit attends to different parts of the task description, updating the control state to represent at each iteration the reasoning operation the cell intends to perform.

The read unit extracts information out of a knowledge base (image), guided by the control state.

The write unit integrates the retrieved information into the memory state, yielding the new intermediate result that follows from applying the current reasoning operation.
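The division of labor among the three units can be illustrated with a simplified sketch. It is not the paper's formulation: plain dot-product attention stands in for learned projections, the write step is a fixed interpolation (the `gate` parameter is an assumption), and all function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def weighted_sum(weights, vectors):
    """Attention-weighted sum of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]


def control_unit(control, question_words):
    """Attend over the question words to form the next control state."""
    attn = softmax([dot(control, w) for w in question_words])
    return weighted_sum(attn, question_words), attn


def read_unit(control, memory, knowledge_base):
    """Retrieve information from the knowledge base, guided by the control state."""
    # Assumption: relevance is similarity to the control plus similarity to memory.
    attn = softmax([dot(control, k) + dot(memory, k) for k in knowledge_base])
    return weighted_sum(attn, knowledge_base), attn


def write_unit(memory, retrieved, gate=0.5):
    """Integrate the retrieved information into the memory state."""
    # Assumption: fixed interpolation in place of a learned transformation.
    return [gate * m + (1.0 - gate) * r for m, r in zip(memory, retrieved)]


def mac_cell(control, memory, question_words, knowledge_base):
    """One reasoning step: update control, read, then write."""
    control, _ = control_unit(control, question_words)
    retrieved, _ = read_unit(control, memory, knowledge_base)
    memory = write_unit(memory, retrieved)
    return control, memory


question_words = [[1.0, 0.0], [0.0, 1.0]]
knowledge_base = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
control, memory = mac_cell([1.0, 0.0], [0.0, 0.0], question_words, knowledge_base)
```

Note that the control state is updated only from the question and the memory only from the knowledge base; the two interact solely through the attention in `read_unit`, which mirrors the separation between control and memory described above.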

@inproceedings{hudson2018compositional,
  title={Compositional Attention Networks for Machine Reasoning},
  author={Hudson, Drew A and Manning, Christopher D},
  booktitle={International Conference on Learning Representations (ICLR)},
  year={2018}
}

Download paper (arXiv)

Presented at ICLR 2018

Acknowledgments

This work is supported by the Defense Advanced Research Projects Agency (DARPA) Communicating with Computers (CwC) program.